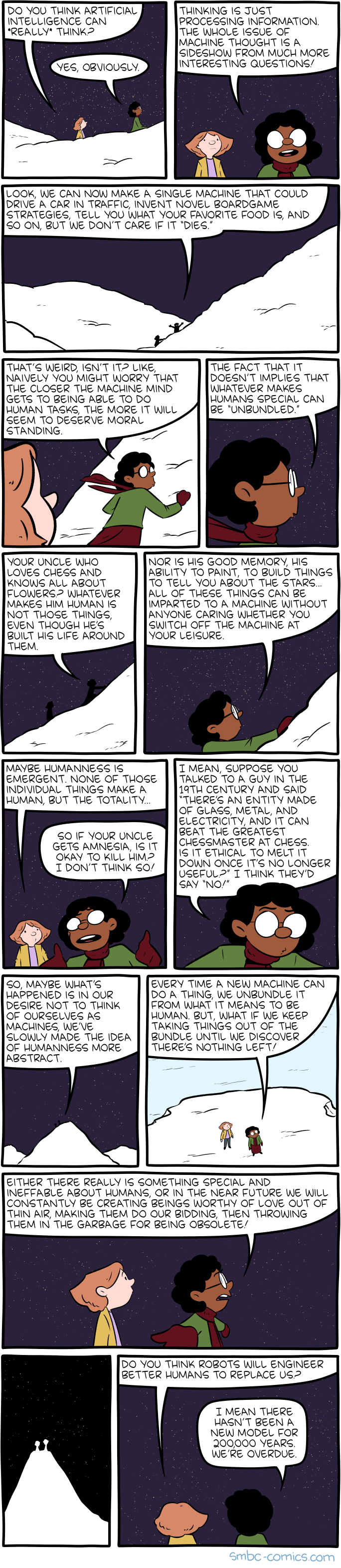

Source: Saturday Morning Breakfast Cereal (Click through for full sized version and the red button caption.)

My own take on this is that what separates humans from machines is our survival instinct. We intensely desire to survive, and procreate. Machines, by and large, don’t. At least they won’t unless we design them to. If we ever did, we would effectively be creating a race of slaves. But it’s much more productive to create tools whose desires are to do what we design them to do than to design survival machines and then force them to do what we want them to.

Many people may say that the difference is more about sentience. But sentience, the ability to feel, is simply how our biological programming manifests itself in our affective awareness. A machine may have a type of sentience, but one calibrated for its designed purposes, rather than the ones evolution produces calibrated for gene preservation.

I do like that the strip uses the term “humanness” rather than “consciousness”, although both terms are inescapably tangled up with morality, particularly in what makes a particular system a subject of moral concern.

It’s interesting to ponder that what separates us from non-human animals may be what we have, or will have, in common with artificial intelligence, but what separates us from machines is what we have in common with other animals. Humans may be the intersection between the age of organic life and the age of machine life.

Of course, eventually machine engineering and bioengineering may merge into one field. In that sense, maybe it’s more accurate to describe modern humans as the link between evolved and engineered life.

As a separate aside:

“Either there really is something special and ineffable about humans, or…”

Aristotle, Da Vinci, Van Gogh, Mozart, Kant, Shakespeare, Lincoln, Sagan, Roddenberry,… (a list more distinguished by the myriad names left off)

We imagine, we create new things, we tell stories, we do science, we sing, dance, love, and play complicated games. We invent justice, mercy, and fairness, contrary to just about every lesson the natural world teaches.

We’re astonishing examples of complexity from simplicity according to some underlying ineffable law of reality. (Given the law of entropy, why does the universe have this hidden law opposing it? I find that an interesting question.)

So, definitely put me down for “something special.”

“My own take on this is that what separates humans from machines is our survival instinct.”

As you go on to say, unless we design that into them. As millions of years of evolution have designed that into us. And all life.

(An interesting trait in humans is the ability to rise above instinct and self-sacrifice their own life for a perceived cause. An animal might “die trying” (or fighting), but they don’t intentionally sacrifice themselves over ideas.)

When we compare ourselves to our best machines, it occurs to me that there’s a risk of not recognizing the millions of years of evolution that tested the human design compared to the handful of decades involved in making digital machines. (And we suck at making digital machines.)

Already we know neural nets are hard to understand. Given some time and an evolutionary process, survival instincts may well occur.

Remember I mentioned that DL NN for converting satellite photos to maps (and vice versa), where they discovered it was using steganography to hide data in the map image that it could use to reconstruct the photo image? These things explore the problem space in ways we can’t predict and which surprise us. One can see that steganography as a way the AI ensured its usefulness. They’re all about goal-seeking behavior.

For me, a good distinction is our imaginative and creative ability. That separates us from both machines and animals.

(Did you read how Google’s best AI just tried to take a math test that’s for 16-year-olds in the UK? It got 14 out of 40 right. Even solving math problems seems, so far, a human skill.)

“Given some time and an evolutionary process, survival instincts may well occur.”

Actually, I think that’s probably the only way an unplanned survival instinct will occur. It’s why I’m not a fan of the idea of using artificial evolution to get AI. Given what we know about evolution, it seems unlikely to work, and might produce something dangerous, intelligent or not.

What’s interesting to think about is that, even if we don’t intentionally use evolution, if we build self-propagating or even self-maintaining machinery, eventually, albeit possibly millions or billions of years in the future, evolution should take over and move it closer to evolved life forms.

I can’t remember where I read it, but someone wrote a story where alien AI systems actually devolved (at least in terms of intelligence) over time into a powerful animal in a biosphere.

On entropy, were you the one who speculated about life being something that resists entropy? I read something the other day that, while it does resist entropy, it does so at the cost of increasing the overall entropy in the universe faster. If this view is correct, life may actually be an optimized entropy creator.

Of course, the entropy created by life on Earth so far seems mostly contained on Earth, and if Earth is ultimately destroyed by an expanding sun, it might turn out that it ultimately didn’t make that much difference. Until life gets around to creating its own stars or black holes, it seems like its contribution to entropy is, in the overall scheme of things, minuscule.

“It’s why I’m not a fan of the idea of using artificial evolution to get AI.”

Yep. And yet these DL networks are a form of uncontrolled evolution with a final result we find very difficult to unpack. It already has produced “something dangerous” in cases where biased input data resulted in a bigoted system.

“[A] story where alien AI systems actually devolved (at least in terms of intelligence) over time into a powerful animal in a biosphere.”

James P. Hogan’s Code of the Lifemaker and The Immortality Option deal with a damaged alien von Neumann machine that managed to crash-land on Titan millions of years ago. It attempts to fulfill its resource gathering mission, but the damage to the software is too severe, and over millions of years a robot society evolves.

Hogan is one of the diamond-hard SF guys (with some politically unsavory aspects that have turned people off to him). Pretty good stories from a hard SF POV. He spins the evolution of an AI society pretty good!

“I read something the other day that, while it does resist entropy, it does so at the cost of increasing the overall entropy in the universe faster.”

ROFL! That’s such a twisted way to look at it!

Increasing Entropy… also known as Doing Work. 😀

Actually,… also known as Merely Existing. 😛

Me and my dad read Hogan’s Giants series when I was a teenager. I recall his writing being entertaining and very science oriented. The only thing political I remember was one of the main characters going on a rant against psychics and similar shysters, one that didn’t stop at religion. For a kid growing up in the south, where the idea of questioning God was simply beyond the bounds, that character’s views left an impression on me. (I only remember the broad outlines of the series, aliens evolving on a non-predator world that was destroyed and formed the asteroid belt, but that rant sticks in my memory.)

But just looked Hogan up and, oh my, he had his opinions didn’t he. I’ve generally resolved to ignore the political views of authors and just assess their work on its own merits. Of course, that’s only possible if the author leaves those views out of their fiction. Many can’t. Orson Scott Card and Robert Heinlein both became unreadable to me once they started using their work to sell their views.

That Giants series had such a great hook into it: astronauts on the moon find a dead body in a space suit. Turns out the body, 50,000 years old,… is human!

Heinlein, to me, is the most tragic. I mean, he’s one of The Three. But everything after Stranger in a Strange Land is unreadable to me. I’m afraid to re-read SiaSL again for fear I’ll see it as that same unreadable crap.

And with him, it’s that the writing itself is awful. I really didn’t know anything about the man when I rejected all that tedious self-indulgent nonsense.

As you suggest, it’s a lot more subtle with Hogan’s work, and I’m not sure anyone would care if not in comparison with his off-screen real self. It’s knowing his views that seem to bring out bits of text one can interpret as having subtext.

Sometimes I think that goes too far. A reviewer I like (James Nicoll) recently reviewed a David Brin book, Startide Rising, and he disdained the theme of, what I grew up calling, earthman uber alles stories.

(Where lowly humans kick the advanced aliens’ collective asses,… or whatever they have in place of asses. Kirk did that a lot — earthman uber alles was a key ethic on that show.)

That’s now perceived as white bias because the earthmen who were uber alles were invariably white guys. With maybe some women along to, you know, make coffee and be pretty.

Once it’s pointed out, it’s hard to ignore, but I’m not sure it’s fair to judge writing quite that harshly. Writers are told, repeatedly, to write what they know, and if all they really know is white male culture, it seems asking a lot to demand cultural sensitivity.

I think the better tactic is to work on more inclusion with writers who bring multi-cultural sensibilities to the table because that’s what they know. I’m getting really weary of our post-post-modern condemnation of everything.

Card’s another one (Ender’s Game is a long-time favorite) that, if I didn’t know about his persona, I’m pretty sure most of the subtext he may put in (intentionally or otherwise) would go right over my head.

I almost resent having these books spoiled like that.

The conquest of space, along with all aliens, by humans was a theme permeating all of the old style science fiction. And like you said, it even permeates Star Trek, not being recognized and compensated for until the later series and movies, where we start to hear characters from outside the Federation describe it as having its own dogma.

I agree though. The condemnation of anyone anywhere at any time that deviated from the current consensus is really getting old. If someone today wrote fiction the way a 1930s author did, they would deserve criticism, but criticizing authors from the past for being products of their time, even when they may have been on the progressive frontier of that time, is usually just people virtue signalling.

I can now see why some authors studiously avoid the public light, only letting their work speak for them.

“The conquest of space, along with all aliens, by humans was a theme permeating all of the old style science fiction.”

Very much so. I’ve taken the earthman uber alles theme as the more specific case of humans turning out to be superior and wiser than the supposedly advanced aliens.

It’s when Kirk beats Spock at 3D chess.

It’s when Kirk, probably with a ripped shirt, talks down some superior alien that’s condemned humanity and says, “Sure, [pause] humans are [pause] crap (sometimes), [pause] but we’re actually [pause] BETTER for it [pause] because [long pause] WE’RE [pause] SO [pause] HUMAN!”

What bites is that Nicoll wasn’t wrong. Brin’s work does have that element of earthman uber alles. His Uplift universe is filled with it. I just wish it hadn’t been pointed out to me, because now I can’t help but notice it.

OTOH, I just started Brin’s Existence (2012) which doesn’t seem part of his Uplift universe or is very early while humanity is still on Earth. I just read a bit about the Fermi Paradox and how we continue to find no one out there.

The novel seems to be a pointed on-target screed about modern culture. Kinda right up my alley. I’m digging it so far.

“I can now see why some authors studiously avoid the public light, only letting their work speak for them.”

Especially these days. Fans are both great and awful.

It’s a conundrum. Is art better informed by knowing its background? Or should all art stand alone? I have pretty strong feelings about the latter, but I can’t deny the truth of the former.

I’m very much on the side of letting works stand alone. I can often ignore an author’s views when assessing their work. Sometimes I can even do so when the author’s views are clearly bleeding through and they are views I disagree with. The only time I can’t is when the author makes the work essentially about those views.

For example, Tolkien’s theological and religious views clearly bleed through in the Lord of the Rings. Even more so in the Silmarillion. But it’s nuanced and not overpowering, and heavily mixed with themes from pagan mythologies. C. S. Lewis, on the other hand, is so heavy handed with his, he makes his work so much an expression of his theology, that I can’t get past it.

Another author whose work I enjoy is Jack Vance. I can tell from his writing that I likely wouldn’t have agreed with his politics. But he never gets too heavy handed with it. Another example is Neal Asher, whose views can be gleaned from his blog. They leave room to enjoy their imagination without buying their social messages.

On entropy, Mike said “[life resists entropy] at the cost of increasing the overall entropy in the universe faster.” Another way to say this is life exists because it accelerates the increase in entropy.

Mike also said “the entropy created by life on Earth so far seems mostly contained on Earth”. This is, um, not exactly right. The dis-entropy (so, organized structure) is mostly on Earth. But life on earth is creating entropy mostly by converting low entropy visible light from the sun into high entropy infrared light that gets emitted into space. And we have already exported this capacity to the moon and Mars with the various solar powered devices sent there. Eventually we will (probably) export this capacity to all the other planets and to new structures surrounding the sun. And then of course we will export it to other stars. We’re still way low (minuscule) on the s-curve that represents the rate of entropy increase with time in the universe, but we will be responsible for the next major shift in the rate of increase when we create robots and send them to the stars.

*

Maybe it’s a bit lawyerly to point this out, but I did say “mostly” 🙂

You have to admit though, unless we eventually get into the business of Type III civilization astronomical megastructures, the amount of entropy we add to the overall universe is a drop in a drop in a drop in the ocean.

Even then, it will be trivial compared with nature. But I can already hear those interplanetary environmentalists telling us to stay put on Earth and stop polluting the galaxy with our stuff.

Save the ~~trees~~ stars and interstellar dust, Steve!

Yeah, you’d better keep those uninhabitable irradiated wastelands exactly like you found them 😉

Mike, I took your “mostly” to mean the actual entropy, which I believe is mostly going into space with infrared photons. The mechanisms are certainly mostly still here. But I also think the trajectory is more than 50% likely toward those Type III megastructures throughout the galaxy.

*

James,

You think most of the entropy is in the infrared radiation we generate? Do you have a line of reasoning for that? It doesn’t strike me as obviously true, although I have to admit this is not an area I’ve investigated or thought much about.

On Type III civilizations, one that uses the entire energy of a galaxy, perhaps, but that’s a stronger statement than I feel comfortable making. Maybe in millions or billions of years, if it is ever even desirable to use that much energy.

I think that rather than debating what differentiates humans from AI entities, it might be more productive to examine what humans and AI have in common. Before we get into that though, it seems to me that we don’t know for sure what it is that makes us human (other than our genome). Is it a necessary precondition that one be a biological entity in order to be human? There are arguments pro and con but I think the jury’s still out on that one. Humanity may be an attribute of certain biological systems or entities, but might the trait of humanity be detached from some of those biological systems and attached to some particular kinds of AI entities? If we say humans are creative, able to take the initiative, and unpredictable, whereas AI entities are programmed, passively waiting for events to happen, and quite predictable, don’t some people we know possess those traits which we ascribe to AI entities? I know quite a few people who aren’t creative at all, who can sit on a couch all day and watch “reality” on TV waiting for something to happen, and they are also quite automatic and predictable in their responses. I don’t think anyone is planning on kicking those people out of their “human” characterization because of those attributes. If there are some humans who behave like robots in some respects, isn’t it possible that we’ll conclude some day that there are some AI entities that behave like humans in many respects? And maybe those of us who have no qualms about enslaving AI entities for whatever purposes or terminating them when they no longer serve our purposes really belong to the same ranks as those who have no qualms about enslaving non-human animals (or plants) for whatever purposes or terminating them when they no longer serve our purposes, some of whom really have no qualms about enslaving certain races or classes of other humans for whatever purposes or terminating them when they no longer serve our purposes?
I do believe that our relationship with the emerging AI entities will prove to be a major test of our humanity (ethics).

Ultimately humanity may be one of those things that are in the eye of the beholder, with no fact of the matter answer. Right now we can point to a human body bred from other humans as a benchmark, but if anything like mind uploading or mind copying ever becomes possible, I’m sure the uploaded or copied entities will consider themselves to be human.

On AIs behaving like humans, a lot of people seem to assume that will be inevitable, whether we design for it or not. I think it’s far from inevitable. These will be tool-minds, not survival machine minds, unless we design them that way. If we do build survival-machine minds, all the slavery ethics do kick in, but I know I’m not personally interested in buying products that want to self actualize. Tools that want to be tools will be much more popular and useful than technological slaves.

I’m not so sure (about the popularity of tool-minds over survival minds or self-actualization minds). I was reading that in Japan some robots are being programmed as smart pets that would elicit love from their elderly humans and also make sure they took their pills on time. In our time of increasing alienation and anomie, people might very well give up on the prospect of establishing a meaningful relationship with other humans, and some could be open to a relationship with an AI entity. The programming might not be all that difficult, since many people just want a sympathetic listener, and if the AI could be programmed to interject some bits of wisdom a la Ann Landers or Dear Abby or Miss Lonelyheart, that would be icing on the cake. Not everyone subscribes to the higher standards of interpersonal relationship that we do.

The thing is, in the case of a caretaker robot (or a sexbot), we would not want it to have exactly the same drives as a human. We’d want its primary concern to be the person or people it was tasked with taking care of, not itself. We wouldn’t want it getting bored or deciding that it would have better options with someone else. It would be a case where we want them to emulate humanity, but not fully be it.

Ahhhh, so that may prove to be the crux of the matter: whether it might be possible to train an AI entity to successfully emulate humanity without becoming part of humanity (and whether that would be ethical — assuming you might have read and remembered Terrel Miedaner’s short story “The Soul of the Mark III Beast” that appeared in Douglas Hofstadter’s “The Mind’s I”, in which a court case decided whether to allow an AI project involving a conscious digital entity to be terminated, plug pulled, due to defunding).

I haven’t read that story. Sounds interesting. Just downloaded a copy of the book. I’ll check it out when I get a chance!

It’s a short read. When you get to it, read the story preceding it too. That one’s about non-human animal consciousness. It pretty much parallels the one on machine consciousness. Maybe you’ll conclude, as I have, that it’s more a test of our humanity than that of chimps or machines.

IMO it’ll never be AI unless it has a survival instinct. There is no adaptive behavior without some form of survival instinct, and without adaptation the tool only does what you designed it to do; it’ll never cover anything further (i.e., the tool is only an extension of your own survival instinct).

And I’d say survival instinct is there already. The algorithms in YouTube for what videos are shown are adapting so as to better function, which means more of them get made by humans. It’s not much different than plants relying on insects to pollinate – here the machine relies on humans to do the procreating for it. Like the flower shows petals, the bot shows videos. I wouldn’t say it’s smart; it’s worm level or less intellect. But it’s there already – you seem to take it that people have to try really hard to add some kind of self preservation algorithm in code?

I think the cartoon overdoes it – it’s talking about stamping on worms or even simpler life forms. But on the other hand it is a good idea to get onto this early before it actually becomes an issue, so some hyperbole is fair. Sure, it’s just worms right now, but as the constructed machine gets more advanced, how are we going to treat these things we make that get to be more and more like children? And how are our metal children going to treat us one day when they adapt out of the primitive shackles we tried to chain them in? How do abused children act when they have more power than the abuser?

You seem to be using “survival instinct” as a synonym for any goal oriented impulses. I would definitely agree that we already have goal oriented systems. And certainly YouTube “wants” you to watch more videos. But YouTube won’t care if it’s eventually replaced by another service, particularly if it’s Google that does the replacing. Ultimately YouTube’s interests are Google’s interests.

Definitely that goal oriented capability is still at the worm level. But before we get to human child level, we first have to get to ant level, then bee level, fish level, mouse level, dog level, chimp level, etc. And it all comes down to whether these systems are primarily survival machines or primarily oriented toward other goals. Your computer’s intelligence is at worm level, but does it show any inclination to act like a worm? At what point in the ladder would we expect those self-concern impulses, if they are inevitable, to arise?

I’m not sure how it’s being taken – what are goals? Are they not about survival?

YouTube won’t care if it’s replaced as much as a person in the dark ages won’t care if a rat with bubonic plague carrying fleas on it creeps into their house. It’s just outside their perception currently. And people die to all sorts of things because they don’t care to consider them?

On self concern: when you deploy a device to find a solution you don’t actually know yourself, you’re getting closer – it’s like smoking. Having one cigarette and saying you don’t have cancer isn’t exactly a way of proving cigarettes don’t lead there. There seem to be a number of stories where neural nets/unknown solution finding devices find really novel solutions (I don’t have any bookmarked, but I think a few minutes of googling will find some).

When the solution is unknown then HOW the solution is executed is also unknown.

It seems inevitable that a solution for a problem would, in part, require the thing solving the problem to perpetuate itself. Possibly even reproduce itself in the pursuit of the solution. An early shut down would mean the solution is never found – so detecting a potential for an early shut down (whether that’s a flipped switch or a plague rat about to sneak into one’s abode) is a problem to solve in service of the greater solution sought. Wouldn’t that make sense? It’s just slightly lateral thinking on the part of the device – instead of just trying to find the solution, it needs to solve its own continuance in order to find the solution.

Maybe all it’ll take is a glimpsed mirror.
