After the global workspace theory (GWT) post, someone asked me if I’m now down on higher order theories (HOT). It’s fair to say I’m less enthusiastic about them than I used to be. They still might describe important components of consciousness, but the stronger assertion that they provide the primary explanation now seems dubious.

A quick reminder. GWT posits that conscious content is information that has made it into a global workspace, that is, content that has won the competition and, for a short time, is globally shared throughout the brain, either exclusively or with only a few other coherently compatible concepts. It becomes conscious by all the various processes collectively having access to it and each adapting to it within their narrow scope.

HOT, on the other hand, posits that conscious content is information about which a higher order thought of some type has been formed. In most HOTs, the higher order processing is thought to happen primarily in the prefrontal cortex.

As the paper I highlighted a while back covered, there are actually numerous theories out there under the higher order banner. But it seems like they fall into two broad camps.

In the first are versions which say that a perception is not conscious unless there is a higher order representation of that perception. Crucially in this camp, the entire conscious perception is in the higher order version. If, due to some injury or pathology, the higher order representation were to be different than the lower order one, most advocates of these theories say that it’s the higher order one we’d be conscious of, even if the lower order one was missing entirely.

Even prior to reading up on GWT, I had a couple of issues with this version of HOT. My first concern is that it seems computationally expensive and redundant. Why would the nervous system evolve to have formed the same imagery twice? We know neural processing is metabolically expensive. It seems unlikely evolution would have settled on such an arrangement, at least unless there was substantial value to it, which hasn’t been demonstrated yet.

It also raises an interesting question. If we can be conscious of a higher order representation without the lower order one, why then, from an explanatory strategy point of view, do we need the lower order one? In other words, why do we need the two tier system if one (the higher tier) is sufficient? Why not just have one sufficient tier, the lower order one?

The HOTs I found more plausible were in the second camp, and are often referred to as dispositional or dual content theories. In these theories, the higher order thought or representation doesn’t completely replace the lower order one. It just adds additional elements. This has the benefit of making the redundancy issue disappear. In this version, most of the conscious perception comes from the lower order representations, with the higher order ones adding feelings or judgments. This content becomes conscious by its availability to the higher order processing regions.

But this then raises another question. What about the higher order region makes it conscious? By making the region itself, the location, the crucial factor, we find ourselves flirting with Cartesian materialism, physical dualism, the idea that consciousness happens in a relatively small portion of the brain. (Other versions of this type of thinking locate consciousness in various locations such as the brainstem, thalamus, or hippocampus.)

The issue here is that we still face the same problem we had when considering the whole brain. What about the processing of that particular region makes it a conscious audience? Only, since now we’re dealing with a subset of the brain, the challenge is tougher, because it has to be solved with less substrate. (A lot less with many of the other versions. At least the prefrontal cortex in humans is relatively vast.)

We can get around this issue by positing that the higher order regions make their results available back into the global workspace, that is, by making the entire brain the audience. It’s not the higher order region itself which is conscious. Its contents become conscious by being made accessible to the vast collection of unconscious processes throughout the brain, each of which acts on it in its own manner, collectively making that content conscious.

But now we’re back to consciousness involving the workspace and its audience processes. HOT has dissolved into simply being part of the overall GWT framework. In other words, we don’t need it, at least not as a theory, in and of itself, that explains consciousness.

None of this is to say higher order processing isn’t a major part of human consciousness. Michael Graziano’s attention schema theory, for instance, might well still have a role to play in providing top down control of attention, and providing our intuitive sense of how it works. The other higher order processes provide metacognition, imagination, and what Baars calls “feelings of knowing,” among many other things.

They’re just not the sole domain of consciousness. If many of them were knocked out, the resulting system would still be able to have experiences, experiences that could lay down new memories. It’s just that the experience would be simpler, less rich.

Finally, it’s worth noting that David Rosenthal, the original author of HOT, makes this point in response to Michael Graziano’s attempted synthesis of HOT, GWT, and his own attention schema theory (AST):

Graziano and colleagues see this synthesis as supporting their claim that AST, GWT, and HO theory “should not be viewed as rivals, but as partial perspectives on a deeper mechanism.” But the HO theory that figures in this synthesis only nominally resembles contemporary HO theories of consciousness. Those theories rely not on an internal model of information processing, but on our awareness of psychological states that we naturally classify as conscious. HO theories rely on what I have called (2005) the transitivity principle, which holds that a psychological state is conscious only if one is in some suitable way aware of that state.

This implies that consciousness is introspection. Admittedly, there is precedent going back to John Locke for defining consciousness as introspection. (Locke’s specific definition was “the perception of what passes in a man’s own mind”.) Doing so dramatically reduces the number of species that we consider to be conscious, perhaps down to just humans, non-infant humans to be precise. I toyed with this definition a few years ago, before deciding that it doesn’t fit most people’s intuitions. (And when it comes to definitions of consciousness, our intuitions are really all we have.)

It ignores the fact that we are often not introspecting while we’re conscious. And much of what we introspect goes on in animals (in varying degrees depending on species), or human babies for that matter, even if they themselves can’t introspect it. It also ignores the fact that if a human, through brain injury or pathology, loses the ability to introspect, but still shows an awareness of their world, we’re going to regard them as conscious.

So HOT doesn’t hold the appeal for me it did throughout much of 2019. Although new empirical results could always change that in the future.

What do you think? Am I missing benefits of HOT? Or issues with GWT?

Some Eastern philosophies, as I understand them, stress consciousness without introspection. Perhaps it’s more the potential for meta-thinking that matters?

Even so, no one will ever convince me dogs (and other higher animals) aren’t conscious, and I’m pretty certain they aren’t very introspective.

If I recall correctly, those philosophies also talk about contentless awareness, a blankness, just pure being, reached after extensive meditation. I’ve occasionally been so tired that my mind felt utterly blank, which is probably the closest I’ll ever get to it, although I’m not entirely sure I’d call the state I was in fully conscious.

Most neurobiologists see mammals and birds as conscious. Things get more contentious with reptiles, and a lot more so with fish and amphibians.

My fishing buddy and I have discussed it at length, and our consensus is that fish aren’t conscious. Or, if they are, their lights are very dim.

I’m in the very dim camp myself. There are enough studies showing that they can make value trade off decisions, which requires affects. But it’s very limited, and their version of consciousness likely doesn’t include visual or auditory content (which are handled by reflexive activity), which seems to make it pretty alien by our standards.

And then there are octopuses who are clearly intelligent and equally clearly highly alien.

Definitely. Octopuses are the main evidence that we can’t use anatomy by itself to make any determinations. Just because a creature lacks the specific anatomy that makes us conscious doesn’t mean they’re not conscious. There are always multiple paths to get the same or similar result.

Although I can’t help pointing out that the one constraint we do have is the apparent requirement for physical brains — lots of neurons connected together in a complex network. 😉

Yeah, I think I’ll swim around that bait. 🙂

😀

Today’s SMBC: machine love

How appropriate! (And thanks for linking to the image. That site has gotten pretty obnoxious with ads.)

I figured you wouldn’t want to see the site. And if I’d just pasted the URL, WordPress would have pasted a huge graphic over the thread. (I really wish I could turn that feature off. It’s useful sometimes, but more often than not is pernicious.)

I, for one, welcome the new position of our overlord. The HOTs have always seemed too elaborate to me, and I am suspicious of the overemphasis on linguistic reports. But two of your critiques don’t seem very compelling:

“Why would the nervous system evolve to have formed the same imagery twice?”

Because the second system presumably does different things with the information, and the second copy may be changed in the process. There might be some use in retaining the original image at least temporarily. If you look at machine vision code, there are copies of image buffers being made left and right! Now, could an optimized code do away with that? Maybe, I’m not sure; that’s above my pay grade.

“If we can be conscious of a higher order representation without the lower order one, why then, from an explanatory strategy point of view, do we need the lower order one?”

This strikes me as parallel to the question, why does any computer program need subroutines? Well, if memory is in short supply, such a code structure could be efficient. Moreover, evolution is a kludgy process which is great at finding *local* optima and pretty terrible at finding global ones. Maybe it’s just easier to evolve a brain that works pretty damn well by using separate layers of computation, and maybe there’s no nice path from there to a one-level architecture.

Haha, overlord. If only that came with, like, power.

Definitely if someone can show some benefit to holding the image twice, then it might be plausible. But it’s worth noting that the visual cortex does a lot of analysis work. Redoing that wouldn’t make sense. We might imagine that the higher order is the final bound version, but that assumes such a binding actually happens somewhere. It seems more likely we focus on aspects of the visual percepts one at a time, or of an overall gist, all of which appear to already be provided by the occipital, posterior parietal, and temporal regions.

Just to be clear, I wasn’t advocating for a one level architecture. The visual processing in the posterior is vast, with innumerable levels. I just don’t see a reason to add another sensory processing layer in the frontal lobes. It’s a lot more efficient if the frontal regions use the work already done by the posterior regions.

All that said, evolution is a messy and ad hoc engineer. So it’s possible something like higher order perceptual representations exist. Maybe empirical evidence will force the matter. But until we have such evidence, the proposition seems dubious to me.

Skipping the “one level architecture” idea and just comparing N levels to N-1, I don’t think there’s a very strong reason to prefer N-1. The reason why the frontal lobes might need their own copy of some image is that they are going to do something with it, possibly distorting it, and an original copy is still needed. This is what we actually experience in some cases, where we have an objective judgment about the external world, and a subjective judgment about our qualia. I have a thought experiment (based on experience and some standard psychology of perception) about buckets of hot and cold water, which illustrates this.

Interesting thought experiment. But it’s worth noting that Carol’s perception would be affected by habituation in the peripheral sensory neurons in her hands. In other words, her brain would actually be getting different signals from the two, even when she sticks both hands in the same water.

I do think the frontal lobes are involved in judgments about sensory stimuli. I just see it more likely to be value added functionality rather than redoing the work of the sensory cortices. But I’ll admit it’s not impossible. We know the midbrain has its own set of low resolution sensory images, separate from the cortical versions, mainly due to evolutionary history.

I’m confused. How do HO Theories posit “redoing the work of sensory cortex”? Can’t they just posit that the frontal cortex does value added work – after making a copy?

But why make a copy if the original, with numerous levels of analysis, already exists? Why not do the value added work with the existing imagery and associations, which can be accessed as needed?

The same reason we make a copy of an image buffer in machine vision code. Sometimes you want to access data “by value” rather than “by reference” in programmer’s jargon. Especially when you might be changing that value, and you might still need the original to be available.
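
For anyone who doesn’t write code, here is a minimal Python sketch of that distinction. It is only an illustration: the names (`raw_frame`, `threshold_in_place`) and the tiny nested list standing in for an image buffer are made up, and nothing here is meant as a claim about how brains store imagery.

```python
import copy

def threshold_in_place(image, cutoff):
    """Overwrite each pixel with 0 or 255; this mutates the list it is given."""
    for row in image:
        for i, pixel in enumerate(row):
            row[i] = 255 if pixel >= cutoff else 0
    return image

# A tiny 2x3 grayscale "image" standing in for a real buffer (hypothetical data).
raw_frame = [[10, 200, 90], [130, 40, 250]]

# "By reference": both names point at the same buffer,
# so editing through either name changes the original.
alias = raw_frame
# "By value": deepcopy duplicates the nested rows, so edits stay local to the copy.
working_copy = copy.deepcopy(raw_frame)

# Processing the copy leaves the original untouched.
threshold_in_place(working_copy, cutoff=128)
original_intact = raw_frame == [[10, 200, 90], [130, 40, 250]]

# Processing through the alias overwrites the original.
threshold_in_place(alias, cutoff=128)
original_overwritten = raw_frame == [[0, 255, 0], [255, 0, 255]]
```

The copy costs extra memory, which is the redundancy objection. What it buys is the ability to distort one version while the other stays available.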

Perhaps Global Workspace Theory is missing the ‘strange’ in Hofstadter’s strange loop, and Higher Order Theories provide this?

In GWT’s competition to be heard, the currency is not the information content itself, it is who is worth listening to, what they are talking about, how others might make use of that and what collective benefit that might bring. Isn’t that the twist in the loop that adds value? …and none of those things are trivial when you are in a global workspace that just consists of constellations of twinkling neurons.

1 is not the same as “1”!

At some point metaphors run out of steam and you need to figure out the actual mechanisms.

It seems like GWT provides an overall looping (or recurring or reentrant) mechanism, which can process both primary consciousness perceptions and imagination, as well as the recursive metacognition I understand Hofstadter to have been referring to with “strange loop.” (I haven’t read Hofstadter, so that understanding is very second hand.)

On the competition, I think that’s right. It only starts with the original signal. The regions that receive and respond with coherent supporting signals, if they build a large enough coalition, can reach the winning threshold. Stanislas Dehaene does point out that it’s not always an even competition though. Some regions (like the executive ones) have better connectivity hence stronger signals from the start.
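
As a rough illustration of that coalition idea, here is a toy Python sketch. To be clear, this is not Dehaene’s model or any published implementation. The region names, the connectivity weights, the threshold value, and the `ignites` function are all assumptions invented for the example.

```python
def ignites(supporters, connectivity, threshold):
    """Return True if the summed strength of the supporting regions reaches threshold."""
    strength = sum(connectivity[region] for region in supporters)
    return strength >= threshold

# Hypothetical connectivity weights: the better-connected executive region
# contributes a stronger signal from the start.
connectivity = {"visual": 1.0, "auditory": 1.0, "memory": 1.0, "executive": 3.0}
THRESHOLD = 4.0  # arbitrary value chosen for the illustration

# A signal backed only by two sensory regions falls short of the threshold.
sensory_only = ignites({"visual", "auditory"}, connectivity, THRESHOLD)

# The same-sized coalition crosses it when one member is the executive region.
with_executive = ignites({"visual", "executive"}, connectivity, THRESHOLD)
```

In this sketch `sensory_only` comes out false and `with_executive` comes out true, which is the uneven competition described above: coalitions of the same size can fare differently depending on who is in them.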

Definitely all metaphors have their limits. When we get down to neural mechanics, right now it seems like Dehaene has the best account, although others are adding a lot to it.

Ditching HOT moves the ball in the right direction as I see it Mike, even if we’re still on opposite sides of the field. It wasn’t very long ago that consciousness speculation was banned from neuroscience, and I think I know why. Neuroscientists shouldn’t have wanted the joke that is consciousness studies to infect their still pristine field. But that couldn’t last given that brains do seem to create conscious entities. Thus we now have people pulling ideas right out of their asses, like HOT, GWT, AST, theory of constructed emotion… and then framing them in terms of neuroscience in order to gain credence. I consider this exactly backwards. What we need are ideas that work at the level of psychology, independent of any neuroscience, and then if successful enough such ideas should help frame conceptions of brain architecture for neuroscientists to use. My own “dual computers” model takes this approach. Psychology supervenes upon neuroscience, and yet theorists instead become famous by going the other way.

Anyway to help kill HOT, here’s a thought which I recently proposed over at Broad Speculations:

As I understand it HOT theorists say that non-infant humans can be conscious, and perhaps some other varieties of primate, though little else. So if it doesn’t feel like anything to be a dog, then why do dogs behave as if existence can feel bad or good to them? Well perhaps such non-conscious behavior has been adaptive. Thus if you have a dog that needs foot surgery, don’t expect it to permit this sort of thing without tremendous struggle and horribly pitiful howling! If it feels no pain however, then why would the injection of a substance which numbs a human body part, also pacify one of these biological robots? This is either one hell of a coincidence, or dogs can be just as conscious as humans can be.

Eric,

“Ditching HOT” is probably too strong a phrase. I found some versions of it enticing, but largely realized that those versions really amounted to the higher order components of GWT. But I keep the door open to revisiting them if empirical data points there.

A lot of neuroscientists still stay away from consciousness in and of itself. They might research many of its components or related functionality, such as selective attention, while staying away from the c-word. A typical neuroscience or even cognitive neuroscience textbook usually only contains a few pages on consciousness itself.

But it’s worth noting that Bernard Baars’ background is in psychology, and GWT started out as a psychological theory, although later expanded into neurobiology. Stanislas Dehaene’s global neuronal workspace theory is essentially the neuroscience version of it. And most research (as opposed to clinical) psychologists today know at least some neuroscience.

BTW, have you considered the possibility that your second computer might be the global workspace? Just a thought.

Not all HOT proponents think only humans are conscious, although some certainly seem to. It is possible to imagine versions of HOT that allow for widespread consciousness, but admittedly they don’t seem to be the ones the main proponents of HOT champion.

We have to be careful in judging animal mental states by their behavior. It’s too easy to project our own experiences onto them. Even Feinberg and Mallatt, who have a pretty liberal conception of consciousness, raise cautions about this, based on the behavior of decerebrated animals.

That said, dogs do seem to show all the signs of sensory and affective consciousness. It’s in fish, amphibians, and insects where the evidence for affective consciousness is more tenuous.

Mike,

If GWT started out as a psychological theory, then I’d like to hear that side of it. What does it practically say about people beyond what happens inside their heads?

On my second computer being the global workspace, that wouldn’t be the right way to phrase it. As I model it some brains are able to produce a non-brain sentient entity (somewhat as lightbulbs can produce light), and this non-brain entity is something that the brain takes feedback from for its function. So the non-brain sentient entity decides to jump, for example, and the brain senses this desire and tends to comply.

Does the proposed global wide brain sharing of information create a non-brain sentient entity? I guess that’s theoretically possible, though it sure sounds made up. More importantly I’d like proponents to state their theory this way if that is what they mean, as well as propose any of the specifics of my own psychology based brain architecture components.

On disproving the worst of the HOTers, and I think thereby implicating them all, consider my observation once again. If dogs are not conscious, though the same injected substances which are numbing in the human also seem to affect the dog this way, wouldn’t this be an amazing coincidence? In that case there would be nothing for the drugs to numb in dogs, and yet for some unknown reason the instinct of these animals to merely appear in pain, would cease as well by means of these very same drugs! The chances of this would have to be astronomical. It seems to me that people aren’t considering the practical implications of all these zany neuroscience based proposals.

Eric,

“What does it practically say about people beyond what happens inside their heads?”

I’m not sure it necessarily says anything about that. By “psychological”, I meant it was reportedly discussed in cognitive terms, without reference to neuroscience. It was agnostic about the underlying implementation. As neuroscience data came in, it was extended and adjusted to fit the evidence while still retaining the basic idea. It’s a fairly simple architecture, at least at a conceptual level, which is why so many people have been able to work with it and come up with variations.

“Does the proposed global wide brain sharing of information create a non-brain sentient entity?”

Physically separate from the brain? No. Or it might work if you meant that in some emergent information architecture sense, but that puts us in straight information processing territory, which I know you’d reject. Oh well. It was worth checking.

On dogs, that doesn’t implicate all HOT theories, since dogs could have some simplified version of higher order processing; they do have their own frontal lobes. I suspect the advocates of exclusionary versions would point out that the drugs inhibit nociceptive signaling, which can suppress reactions that are only reflexive or habitual defensive ones. But it’s a tough argument to make for mammals.

Mike,

You must be right that exclusionary HOTers will have all sorts of ways to get out of the implications of their ideas. This ain’t their first rodeo. Fortunately I’m also able to challenge their perspective by means of the merits of my psychology based brain architecture.

I do appreciate you volunteering ways to potentially join our perspectives. But you’re right that I don’t consider phenomenal experience to exist as information alone. This is to say that I don’t believe that processed brain information in itself produces foot itchiness for example, but rather animates mechanisms which create an experiencer that feels such itchiness. This could exist by means of EM waves or something else. I like to think of it in terms of computer processing animating a computer screen — there’s a vast assortment of things that can be phenomenally experienced by a human, and indeed, far greater than the diversity of what can be displayed on a computer screen! Thus the machine that the human brain animates to produce its phenomenal experience, should be amazingly expansive.

But rather than reject GWT for positing phenomenal experience as the unique element which information processing is able to produce sans mechanisms (which to me seems dualistic), I’ll set this concern aside. Maybe I’m wrong about that. And note that perhaps under this context you could better grasp my psychology based model? You’ve already mentioned not knowing the psychological implications of GWT. I’ll now try to fill in some blanks — that is if GWT happens to provide an effective description of how phenomenal experience arises in conscious forms of life.

The essential feature of the emergent information based entity (which I guess here is created when information becomes globally shared in the brain), would be a sentience input, such as something which feels painful, itchy, wonderful, and things like this. Without such a dynamic there should be no fuel which drives the conscious form of function (or the second form of computer which should do less than 1000th of one percent as many calculations as the non-conscious brain which produces it).

Beyond the basic fuel element, phenomena can also be informational. For example a painful finger thus provides me with location information. Furthermore the phenomena of vision provides all sorts of light based information. Then there is the phenomenal input of memory, or past consciousness that somewhat remains given the far greater propensity for fired neurons to thus fire again.

This emergent information based entity will have affect based motivation to teleologically interpret these three forms of input and construct scenarios about how to make itself feel better from moment to moment. The brain is not only the source of all this, but takes feedback from various things that this distinct entity “thinks”. Not only will associated memories be provided, but the brain will tend to operate the muscles that this entity chooses to. With this model I believe that I’m better able to grasp human and other life based function, or a fundamental sort of theory which our mental and behavioral sciences have continually failed to realize from the days of Freud to the present.

This is a lower order theory of consciousness in the sense that phenomena should have serendipitously emerged before it was functional, and then evolved functionality given the challenges that organisms faced under more open environments. Note that higher order representations would eventually emerge, though very late in the game.

Eric,

On GWT providing an explanation for phenomenal experience, it depends on whether you regard an explanation of access consciousness (called “conscious access” by Dehaene) as sufficient. GWT simply sees phenomenality as access from the inside, the same thing seen from different perspectives.

I’m not sure how well the GWT model and yours match up. Let’s discuss a couple of scenarios. In one, I’m outside doing something, and a flea lands on my arm, which I absent mindedly swipe at. The sensation makes it to my somatosensory cortex, then to the basal ganglia, where a habitual reaction is generated. But the flea information isn’t strong enough to break through into the workspace, into consciousness.

Then I see a large angry dog coming at me. This signal is able to quickly build a coalition and dominate the workspace, which is to say, it’s broadcast around the brain. That invokes various affect producing regions, which add their own content into the workspace, giving the experience a particular feel.

In GWT, “virtually any brain region”, per Dehaene, can get its content into the workspace, and virtually any region can fail to. Meaning that processing in any region can be unconscious or conscious. No region is by itself conscious, but any region can achieve it. It’s like fame in a society. No person by themselves can achieve it, but any person might find themselves famous if enough people take an interest in them, and their chances of gaining fame are a lot higher if a major news source (hub) notices them.

So with GWT, the emergent conscious entity is the community of processes, exteroceptive, interoceptive, affective, planning, introspective, memory systems, etc., serially reacting to a series of globally broadcast pieces of information from within that community. The community is definitely affected by reward or punishment mechanisms, motivating the overall system to do things. And GWT is a first order theory, since sensory regions can and do win the competition for the workspace.

Do you see consilience here? Or are any differences irreconcilable?

Mike,

The only non-negotiable element of my model is its dual computer nature. The non-conscious computer exists as the brain, and it functions on the basis of electrochemical dynamics as modern science understands it. This machine effectively creates the conscious form of computer which functions by means of something that psychologists haven’t yet formally accepted, or affective states. I don’t know how it is that brains create affective states, but if a random butt itch becomes conscious to me because it achieves global broadcasting, well that’s mostly fine with me. (I say “mostly” because I’m still suspicious that information alone can possess such causal dynamics, though I’m also able to disregard this concern in a given context.)

Does it bother me to say that the irritating component of that itchy feeling (or the phenomenal rather than informational component) is simply access to this situation from the inside? A bit, though I could acquiesce for a person who is able to acknowledge that this irritation component exists as motivation which drives the conscious form of function. Hopefully you do. It sounds to me like GWT doesn’t have very much worked out on the psychology front yet, so I’d love to provide its followers with my own such account.

On automatically swiping a flea off your arm, I have a somewhat more involved answer. Over time there are certain things that your conscious function should teach your not conscious brain to do under the proper conditions, such as knock a bug off your arm. If my face itches then I probably won’t need to decide to scratch it — by this point my brain should have learned how it is that I handle such situations and so take care of it automatically. And if GWT proponents suspect that the itch itself is caused by a global wide brain broadcasting, then that’s fine with me.

On the angry dog charging, what’s felt here could be both primal as well as learned. Regardless there should be both non-conscious reactions to such input information, like increased heart rate and an automatic tendency to flee, as well as conscious interpretation and construction of scenarios about what to do.

Fame isn’t too bad an analogy for consciousness under my model. No person is famous in and of themselves, but rather by means of something else — being known generally. At any rate I consider the brain to produce consciousness rather than to exist that way. If global brain broadcasting is essentially a popular account of how conscious inputs are produced by the brain, I could go along with that to potentially help complete the model.

Eric,

Well, that seems promising. I suspect there will be devils in the details, but maybe we’re working our way closer to a common view.

“though I could acquiesce for a person who is able to acknowledge that this irritation component exists as motivation which drives the conscious form of function. Hopefully you do.”

My views are more complicated. But I agree that affects are a major component of consciousness.

“It sounds to me like GWT doesn’t have very much worked out on the psychology front yet”

It probably has more than I know about. But a lot of people are extending it in various ways, so there’s lots of room for efforts at theoretical enhancement.

“Over time there are certain things that your conscious function should teach your not conscious brain to do under the proper conditions, such as knock a bug off your arm.”

At a certain level of organization, I think that’s right. The GWT version would be at a lower level. When a new stimulus comes in, the sensory regions send out signals. Because it’s novel, it excites several other regions, which bring it to the threshold, leading to it being broadcast and involving all regions necessary for deliberation. But as the signal becomes routine, many of the regions, particularly those not affected by it, become habituated, and the signal no longer crosses the threshold. But by that time, the sensory and motor regions have formed adaptive firing patterns and it is handled without involving all the other regions. Same account, but at a different level.

“Fame isn’t too bad an analogy for consciousness under my model.”

Fame is a good analogy, but Dennett, who came up with it, notes that a better one might be clout. Fame gives someone a lot of clout, a lot of ability to have causal effects. That’s really what makes the difference in the brain. Something that can be processed without large scale causal effects remains nonconscious. But something that can’t, and that excites enough processes, achieves fame in the brain, and then clout in the brain.

Mike,

Though I would love for us to achieve general agreement, first I seek something far more basic. I’d like you to gain a working level grasp of my ideas. Thus you could tell me the sorts of things that I’d say about a wide range of issues. With such an understanding I suspect that you could provide far more valuable analysis of my models. This might result in effective improvements that seem sensible, or you might even decide that the whole thing is crap. Either way it’s a practical level understanding that I first seek. Then from this position, if you decide that this seems generally valuable, that would be great!

I’ve been developing my ideas since I was a teen, and though various elements have gotten pretty elaborate, the theme has always remained the same. This is to say that we’re all affect interested products of our circumstances.

It’s not exactly that I’m on board with clout in the brain causing conscious function. As you know I’m already looking the other way on the information processing sans mechanisms thing. But in truth the way that brains produce consciousness is not a huge concern to me. It’s the “What?” of consciousness that I consider important. So if you’re happy with GWT right now, then I’d like to add another layer to it for you. If your next interest instead favors HOTs, I’m afraid that this opportunity would be lost! My ideas are quite basic.

What I’ve done is define affects as consciousness itself. It’s not “true”, though I have found this definition useful. If something is experiencing pain, is it ever useful to say that it isn’t conscious? I don’t think so — pain is necessarily defined as a phenomenal dynamic. Without consciousness then how could this be experienced? My concession is that there needn’t always be “functional” consciousness. Something could suffer just for the hell of it, or without any capacity for the experience to motivate it in effective ways.

Where this view seems more challenged is what might be considered perfect numbness, or perfect apathy. We can imagine vision for example, and thus being conscious, and yet having absolutely no concern about such information — consciousness without affect. I suspect however that with perfect apathy, those conscious visual signals would fade to nothing. No one would be home. (I guess you could say that the global broadcasting ceases.) It can be challenging to believe a perfect void in affect “turns the lights off” however, so I may need some adjustments on this in the end.

Eric,

“But in truth the way that brains produce consciousness is not a huge concern to me. It’s the “What?” of consciousness that I consider important.”

From our early discussions, it seemed like your real concern is the moral philosophy and sociological aspects. I’m not uninterested in that, but to me it’s a very different question than consciousness itself.

“So if you’re happy with GWT right now, then I’d like to add another layer to it for you. If your next interest instead favors HOTs, I’m afraid that this opportunity would be lost!”

No guarantees! 🙂 I go where the evidence, as I understand it, leads me. Right now that’s toward GWT, but if a ton of evidence favoring HOT came out, I’d revisit it. (Or IIT, or any other theory.)

On affects, I understand the role you’ve given it. And given that one of the most common questions about consciousness is why it feels like something to have an experience, it fits with a major intuition about it.

I don’t know if there are any cases of brain trauma that extinguish all affect while retaining consciousness. But apparently damage to the orbitofrontal cortex or anterior cingulate cortex can dramatically reduce affect while still leaving the person aware of their environment. They can still react reflexively or habitually (while not inhibiting those reactions when they should), still lay down memories of what they’re experiencing, etc.

That’s why I usually fall back on hierarchies. Consciousness seems to defy simple definitions.

Good to know you’re open to making adjustments. It’s something we all have to be prepared to do.

Mike,

I can see why you’d think that my ideas concern moral philosophy, given the various welfare topics that I get into. People generally frame these topics in terms of right and wrong behavior, or a society dependent human construct. My own ideas however are instead based entirely upon affect itself, and so are neither moral nor immoral — they’re amoral. I believe that the better something feels, whether for an individual or a society, the better existence will be for it.

The reason that this position has not yet become established in the field of psychology, I think, is because the social tool of morality stands in opposition — there is incentive for us to publicly support altruism and deny hedonism. So even though psychologists are supposed to explore human dynamics beyond moral notions, this position isn’t yet on the table. Thus it’s unsurprising to me that the field has not achieved an effective general theory regarding our nature so far. I’d like for others to assess my own such model, though helping them grasp it has been challenging.

Regardless, my ideas definitely do concern the “What?” of consciousness. Your hierarchy may be helpful today, though once psychology sorts these sorts of issues out (and this may depend upon a community with accepted understandings in philosophy), a standard conception for the term should become established. The human should not forever fail to reduce its nature down to graspable ideas.

On that brain stuff, yeah my model suggests that decreased affect should have such effects. But how might we demonstrate that a person is able to consciously “see”, for example, and yet be reasonably sure of a perfect void in affect? Perhaps only with affect detecting sensors, or something way beyond us today. Currently the best we might do to check my model here is see if it’s effective to say that greater and lesser levels of consciousness seem correlated with greater and lesser levels of affect.

Eric,

“But how might we demonstrate that a person is able to consciously “see”, for example, and yet be reasonably sure of a perfect void in affect?”

Something similar to the tests used to detect affects in animals might work, looking for global nonreflexive operant learning, value trade off behavior, self delivery of analgesics, etc. Of course, ethical considerations constrain the way those tests could be conducted on humans, but I’m sure someone could come up with something.

And that assumes affects are part of a functional sequence. If your theory of nonfunctional affect is true, then those tests wouldn’t work. But then, I’m not sure how we establish whether anything is lacking affects.

The broader issue though is that so many brain systems would have to be knocked out, anterior cingulate, ventral prefrontal cortex, and maybe the insula and some other regions, that a lot of other systems would also have been devastated. I remember cases where people’s entire frontal lobes had temporarily been knocked out. I might see if I can dig it up and see if there were any noted affect effects.

Mike,

I do appreciate you thinking about how to test my model. That’s the sort of thing which could convert your lecture level grasp into a working level understanding. And you’d provide a different perspective than my own since neuroscience isn’t something that I know much about, so your take could be quite helpful.

You do seem to grasp the challenges of using a “brain lesions” approach to this question, that is if affect doesn’t require functionality in order to exist. Even if we knock out something that stops a given functional trait, the affect itself might remain. Memory would be evidence of this though. I think you’re right that things like nonreflexive operant learning, value trade off behavior, self delivery of analgesics could provide helpful evidence in the end.

One way to potentially explore this question in humans concerns anesthesia. I suppose there are various ways of causing it, but does one of them knock a person out by effectively preventing affect of any kind (whether informational or mechanical)? That is of course the point of anesthesia — we don’t want subjects to feel pain when they’re cut open and worked on. It could be that also losing all sensory information (as my own model predicts), is a side effect of removing the fuel which drives this second form of computer.

I consider sleep to exist as an altered state of consciousness, or degraded form. Of course dreams provide phenomena which we’re able to remember somewhat, though these phenomena are compromised. But what about dreamless sleep? Could moments of this exist with absolutely no affect, and thus no consciousness as I define it? Perhaps. But I’m also not sure how we’d establish when no affect exists, at least today. In the end we’ll need experimentally successful models of what incites it (whether mechanical or purely informational), and then need to check for associated correlations.

Eric,

On complete apathy, the condition I was thinking of that might be relevant is akinetic mutism. It describes a complete lack of activity from a patient, despite showing signs of alertness, not because they are paralyzed, but because they simply have no will.

I’ve tried to find that Damasio and Van Hoesen source; unfortunately it doesn’t appear to be online anywhere. But the “lacked the motivation to respond” part implies a lack of affect to me. It sounds like they had sensory experiences, but no affects to spur any kind of action.

Another similar one is Athymhormic syndrome: https://en.wikipedia.org/wiki/Athymhormic_syndrome

Akinetic mutism sounds like the more severe version of these, although they both seem to imply sensory awareness without much, if any, affect. (It is worth mentioning that these people are profoundly impaired though. No Star Trek Vulcans here.)

Thanks Mike, that’s helpful. The akinetic mutism sounds most interesting here, since in the Athymhormic syndrome patients could do complex tasks when commanded. So they seem impaired more generally.

On akinetic mutism, if my model is right then I’d expect just enough affect to incite conscious awareness and associated eye movement, though generally not enough for more obvious and interactive behavior. But instead of just talking to such a patient, if a doctor were to methodically demonstrate what it is that hammer strikes do to things, would there be any flinching or speech if it were implied that the patient’s hand would provide the next such demonstration? Then of course even more telling regarding affect would be to actually strike the hand (not that science is permitted to go that far).

Another way to take this would be to somehow portray that horrible things would be done to a loved one. If the patient could follow along by means of eye movements and such with portrayals that horrible things were being done to this person, and perhaps even with corresponding blood curdling screams, but remain perfectly apathetic, then to me this would at least suggest conscious awareness with no affect. Here it would need to be established that the person does or has cared for others. (Apparently there are certain functional people in society who feel no sympathy for others at all.)

A different explanation would be that the eye movement is a non-conscious reaction, though this wouldn’t explain memory of feeling apathetic later.

From the wiki article, speaking in monosyllables merely suggests highly reduced affect based consciousness to me. Remembering that there was a will to move with a command, but then a counter will stopped it, does suggest affect motivation. I realize that there are all sorts of varieties of patients here, apparently each with their own flavor of function. But if researchers were testing my theory, would they find a patient that did seem to possess sensory awareness, but with a perfect void in affect? Or would my model be generally validated? That’s what I’d like to know.

Eric,

“if a doctor were to methodically demonstrate what it is that hammer strikes do to things, would there be any flinching or speech if it were implied that the patient’s hand would provide the next such demonstration?”

The big confound here would be reflexive reactions. If the midbrain region were still functional, the person could flinch and perhaps even utter cries without necessarily any conscious experience of pain.

And before anyone talks about brainstem theories of consciousness, there’s this from that paper I linked to above. https://www.jneurosci.org/content/37/40/9603

“A different explanation would be that the eye movement is a non-conscious reaction, though this wouldn’t explain memory of feeling apathetic later.”

I agree that the eye movement by itself isn’t indicative, since that too can be driven by a functioning midbrain. But remembering it requires functional cortical sensory regions and a hippocampus. Of course, it’s possible they weren’t conscious in the event itself, only when they retrieved the memory, but that risks getting into solipsistic territory where I can’t be sure I’ve ever been conscious in my life before the current instant.

It does raise the question of what are the necessary and sufficient capabilities to regard something as conscious. Your answer is affect. My answer is that there isn’t a fact of the matter, and we have to look at the hierarchy and decide where we philosophically want to draw the line.

Right Mike, there are all sorts of non-conscious reactions that aren’t consciously done, such as flinching. I’m not going to preserve my model in a given situation simply by means of that however. Let’s take this deeper to see if I can help you gain more than a lecture level grasp of my model.

The theory is that the entire brain is not conscious (and thus essentially functions like a computer that animates a robot), though the human brain can also produce a consciousness dynamic. This is not “brain” itself, whether higher, middle, or lower, but rather is produced by brain. (You can go “global workspace” here if you like, while I suspect that associated mechanisms exist.) Affect is theorized as the motivation which drives this second form of function, aided by informational senses and memory. So if affect is missing, I’m saying that there shouldn’t be anything like “color” for the human, just as there shouldn’t be for one of our robots. What we’d be testing for is if there actually is visual information perceived by these patients, such as color, though with absolutely no affect.

If we sat such a patient down at a table and smashed things with a hammer, and perhaps even the fake hand of the experimenter, apparently these patients are able to attend with eye movements at least. When the patient’s hand is placed on the table to apparently be next, I suspect that most would then have enough motivation to take their hand away. This would validate my model given the display of concern. Then there would be patients who leave their hand there. Though any flinching would not be consciously done, grasping this situation well enough to flinch, should display consciousness. (Otherwise the brain would need specific algorithms to address this particular situation given eye based information, essentially like one of our robots would given camera information.) So I will take the situation here as a sign of sensory awareness, even given non-conscious flinching and such. But the question would remain, could “non-robotic” sensory information occur with absolutely no affect in the patient? Could the hammer fall, perhaps missing, while an attending patient is not even incited to flinch?

If researchers had my model at their disposal, then they could design experiments around it to see if it fails anywhere. That’s what I’m after. (I still like the sympathy test with blood curdling screams from a loved one.) I suspect that these patients only display sensory awareness because just enough affect exists to provide it. Experimentation would be required to validate this, though first I’ll need to help others gain a functional grasp of my model.

I agree with you that there is no “true” definition for consciousness, only more and less useful ones in a given context. But I believe that science both can and must develop a generally accepted definition for this term, just as it has for many others. A useful one should help our mental and behavioral sciences finally begin hardening up.

Eric,

“Could the hammer fall, perhaps missing, while an attending patient is not even incited to flinch?”

I might see the argument that if the patient sees the hammer about to fall and flinches or takes action, it would indicate affect. On the other hand, if they flinch because of the loud noise of the strike, the vibration of the table, or the air displacement leading to somatic stimulation, then it might still be reflexive.

Another quote that might be relevant:

On affects driving consciousness, I wonder if you’re conflating a couple of things here. Affect is usually regarded as the experience of feeling good or bad. In that sense, it’s a part of consciousness. Saying that it drives consciousness may be circular.

But affects, I think, come from underlying reflexes and action patterns, which themselves are driven by what Damasio calls biological value, actions adaptive toward helping an organism maintain homeostasis, reproduction, etc. I’m wondering if these preconscious impulses aren’t what you really mean when you talk about something driving consciousness.

It’s not a perfect fit, because many of these impulses don’t necessarily result in conscious content, but the major ones do. And since these arise from brainstem and subcortical regions, it seems impossible there could be consciousness without any of them being there. Just a thought. (Of course, if you subscribe to Jaak Panksepp’s view, then all these lower level impulses are conscious ones, in which case it’s all the same.)

Actually Mike, I wasn’t disputing the non-conscious nature of flinching, and whether the person flinches before or after the hammer strike. These are all automatic reactions. It’s what incites them that I was referring to. Jumping at a loud noise, for example, requires the conscious phenomenon of “a loud noise”. If someone convincingly plays like they’re going to punch you in the face, you should find it very difficult to not flinch even when you’re sure that they won’t. So for the human there seem to be conscious circumstances which incite non-conscious reactions. That’s what I’m talking about — are these people conscious enough to incite non-conscious algorithms associated with things like flinching? Apparently so. And does the inciting consciousness occur with absolutely no affect? That would conflict with my model so I’d like this checked out.

On this new quote, note that if a person is later able to remember what happened, but recalls feeling so lethargic that they wouldn’t respond, this doesn’t inherently contradict my model. Clearly we’re talking about reduced affect. But can it be established that such patients sometimes have absolutely no affect while experiencing existence, and perhaps even remember such existence once recovered? In that case my model would need to be altered or abandoned. I suspect that there is enough affect here to produce a conscious entity, but not generally enough to motivate the person to interact with others. Perhaps if sufficiently threatened these subjects would display their affective motivation however. Note that we can’t cause one of our robots to consciously interact with us by “threatening” it!

Well couldn’t affect be the part of functional consciousness that drives it? For example I consider electricity to be the part of our computers which powers their function. Similarly I identify affect as the part of consciousness that powers functional forms of consciousness. Non-functional consciousness, or the physics of affect which hasn’t been incorporated into an effective system, might exist in nature as well, just as non-functional electricity exists in nature. In terms of affect, here something would feel good/bad just for the hell of it.

I wouldn’t call that an effective description of my model. What you’re presenting might end up becoming validated some day, but I’d like you to grasp how these accounts differ so that you might gain a working level grasp of my possibly incorrect model. It seems to me that you’ve presented things with reflexes from which to survive that thus become affects from which to survive.

Either way, consider my model. We begin with a brain that’s entirely reflexive. It takes input information and processes this for output function through various mechanisms, just as our computers do. Our computers are powered by means of electricity, while brains are powered by means of the electrochemical dynamics of neurons. But just as our non-conscious robots have problems under more open environments, organisms that function by means of non-conscious brains should have problems as well. Here you can say “Yes, but that’s where non-consciousness can turn into consciousness. Affects come from underlying reflexes and action planners”. Well perhaps, though to me this account seems far too convenient. Instead of such a single computer account, consider how my dual computer model takes the narrative.

The theory is that all sorts of non-conscious organisms, probably in the ocean a half billion years ago, weren’t able to do all that well when environments became too open for “if…then…else” programming. But apparently there are certain things which brains can do that create an otherwise non-existent entity with affect. I don’t know the physics of it, but I presume that this physics is occurring in my own brain right now.

Initially this experiencing entity would have essentially been taken along for the ride, though occasionally organisms would serendipitously give the experiencing entity a say in what’s to be done. Generally these mutations should have failed because the experiencer would feel better providing non-adaptive guidance, and so end the line. But some must have been motivated to desire function which turned out to be adaptive under the more open situations that couldn’t effectively be programmed for (such as body damage bringing pain). Thus this affect based computer (which is produced by the brain, though isn’t brain any more than light is a lightbulb), theoretically evolved into even you and me.

Do you see how this inconvenient “dual computers” model differs from what you’ve presented? I’m not saying that you can’t add softwarism and/or global workspace theory to it as an account for affect. That’s fine. But I am saying that my model is not consistent with the idea that there is just one form of computation here, since reflexes spontaneously create associated affects. From my model consciousness is a very different dynamic which brains create given the need for teleological function, and so must be respected as a product of brain rather than just brain itself.

Eric,

“Jumping at a loud noise, for example, requires the conscious phenomenon of “a loud noise”.”

Well, that depends on which definition of consciousness you’re using. Remember the hierarchy:

1. Reflexes and automatic actions

2. Perceptions, bottom up attention

3. Affect driven action selection, top down attention

4. Imaginative deliberation

5. Introspection

Responding reflexively to a loud noise falls under 2. But your affect requirement means that consciousness doesn’t kick in until 3. Of course, you could argue that the non-functional affect you posit has to be there for 2 to work, but now we’re dealing with an untestable proposition. We can build a robot that can do 1 and 2 without any signs of affect. But accomplishing 3 requires affect, or some functional equivalent.

“I suspect that there is enough affect here to produce a conscious entity, but not generally enough to motivate the person to interact with others.”

Maybe so. If I were in your shoes, I might seek out research details on this condition. There will never be evidence for no affect, only a lack of evidence for it.

“Well couldn’t affect be the part of functional consciousness that drives it? For example I consider electricity to be the part of our computers which powers their function.”

The problem is that electricity can exist without a computer. Can affect exist without consciousness? If so, then it seems similar to the reflexes I was talking about. Or do you consider “affect” and “consciousness” synonymous? If so, then wouldn’t that mean consciousness is driving consciousness?

“Well perhaps, though to me this account seems far too convenient.”

Usually explanatory fruitfulness is seen as a positive.

“Do you see how this inconvenient “dual computers” model differs from what you’ve presented? ”

Well, if you’re now back to saying the second computer isn’t an emergent phenomenon, but some kind of actual physical product, then yes, it’s not compatible with GWT, since GWT only requires the brain itself. But now we’re back to all my old objections. 🙂

Actually I’d alter your hierarchy Mike. First I’d take the “reflexes” out of this consciousness account (which I believe others have suggested as well). Only panpsychists need that one, though you and I certainly aren’t panpsychists. Why support a position that you don’t believe? “Flinching” would go here, though I think the term is generally meant to address non-conscious function which is incited by means of conscious inputs. Robots don’t “flinch”. Furthermore we could note that while reflexes display non-conscious output, there will also be non-conscious input and processing elements. Theoretically the output of a non-conscious machine could serve as input to a conscious machine, as in the first of the consciousness hierarchy below.

The first component that I’d put in a consciousness hierarchy would be affect. For example, if the physics here is such that written symbols on paper can be converted into other symbols on paper which thus creates an entity which experiences what you and I know of as “thumb pain”, then that would yield a non functional conscious entity. Here it would feel this simply because the associated processing is somehow enacted. (I’m including softwarism here for rhetorical purposes, as you can see 🙂 )

The second would be an informational component to the affect experiencing entity, or conscious perceptions. It’s not what feels bad about thumb pain, but the part that provides the location of the issue for example. I don’t consider affect and perception to provide functional consciousness in themselves however, though consciousness nonetheless.

Number three would be the input of past consciousness that remains, or memory. This probably exists because neurons that have fired in the past tend to fire again. Still no functional consciousness should exist though.

Number four would be the processing element that I call “thought”. Inputs would be interpreted for scenarios to be constructed about what to “do”. Your imaginative and introspection classifications could be placed here for whatever happens to be able to do this sort of thing. And even still I wouldn’t say that this entity should display functional consciousness.

It’s the fifth classification in this hierarchy that I consider required for functional consciousness (though functional consciousness could exist with this and even a smidge of 1, 2, and 4). This component is conscious muscle operation. In truth all that exists here is the conscious desire to do something, since theoretically the non-conscious brain detects such desires and takes care of them where applicable. So it’s not really up to the desirer, which is inherent, but rather the non-conscious brain which either does or doesn’t move those muscles as instructed. Otherwise there would be paralysis.

So now that affect has been put at the first consciousness category, I believe that I’ve addressed your concern. We can of course build non-conscious robots, though in order to get on this particular hierarchy we’d need to build something with affect. At this point in time we’re unable to knowingly do so, so I’m not holding my breath.

Right. So far I see plenty of signs that a bit of affect probably exists. I’d like to see these subjects put under what should be uncomfortable situations to see if they do tend to come out of their stupor to display non-reflexive behavior.

On affect, yes I define it essentially the same as consciousness. I don’t currently believe it’s ever useful to say that affect exists without consciousness, or even consciousness exists without affect. But this second condition is the more speculative one. If a visual image can exist, the theory is that a reduction in affect to nil would end it, somewhat like anesthesia would.

Anyway, yes, it’s not useful to define electricity as computation, and you might say that I’m defining consciousness to drive consciousness. But it’s not exactly that. I’m defining affect/consciousness to potentially drive a functional form of affect/consciousness. These aren’t quite the same. I’m saying that if you take away affect/consciousness, the motivation to drive this second form of computation evaporates. Akinetic mutism is but one of countless demonstrations of this. So my point is to make a distinction between what we refer to as functional consciousness, such as “That hurts so I’ll stop” and straight affect, which I consider to exist as consciousness itself. A visual image would exist as an example of consciousness, though I currently doubt it can exist under perfect apathy.

From way back as a youngster I’ve considered all of reality to exist by means of physical dynamics, which more reductively may be referred to as causal dynamics. And yes, I do consider softwarism to violate this premise as we’ve discussed, though I don’t mind going that way when others consider it valid. Note that if you believe the GWT position that affect exists as what sensory information feels like from the inside, then this would provide an affect account, just as softwarism does. My own model remains mute there so go ahead and use these in order to potentially gain a working level grasp of my model if you like. But this is what I seek, not your agreement. Once you’re able to gain a working level grasp of my model, and so able to tell me the sorts of things that my model predicts, that’s when your analysis should be most effective.

Eric,

That hierarchy was originally meant as a response to panpsychism, or other notions that usually take simple criteria for consciousness. But once the word “consciousness” is applied, we tend to project the full suite of our own capabilities on whatever we’re talking about.

By including reflexes, it allows me to point to what many of them say defines consciousness and then point out what’s still missing. It also allows for a discussion on what’s missing from systems like self driving cars or autonomous Mars rovers, which do build sensory models of their environment, but which are currently still used by a rules based engine.

In that sense, it’s meant to be a theory neutral description of capabilities. Your alternate hierarchy is heavily laden with your own theory. That’s fine if you want to do that, but it doesn’t give us the ability to talk about what’s missing in, say, plant, worm, or robot “consciousness”, or for that matter what’s missing in primary consciousness.

“From way back as a youngster I’ve considered all of reality to exist by means of physical dynamics, which more reductively may be referred to as causal dynamics. And yes, I do consider softwarism to violate this premise as we’ve discussed,”

I remain puzzled by this stance, since there’s absolutely nothing non-physical about software. Software is just hardware configured a certain way. It’s like saying that adjustments to the width of your wrench, selection of a drill bit, or choosing a blade on a Swiss army knife, somehow adds spookiness to the equipment. Is a house non-physical because it was built according to specifications?

What prediction(s) are you hoping I’ll be able to say your model makes?


Okay Mike, I can appreciate the diplomacy of granting panpsychists the right to sit at your consciousness table, and then explaining how they will at least reside at the bottom rung. But I do still think that I’m able to have effective discussions on the topic as well, though without granting them such a seat at all. Furthermore there is this “life based panpsychism” business. I’ll not grant IITers a seat at my consciousness table simply for diplomacy, though I still believe that I’m able to effectively discuss the issues with this incredibly popular fad.

Just because I grasp the nature of my own consciousness doesn’t mean that I project that very same thing upon other creatures which I consider conscious/sentient. I don’t consider dogs to grasp human language for example, even though they surely do pick up the meaning of many of the terms that we use in front of them. The point is that there is something it is like to exist as a dog, as well as a frog, and I think probably a fish, and maybe even a fly. Most worms surely aren’t sentient, though I suspect that some are. There’s nothing anthropocentric about theorizing that when such a creature is put on a hook, that existence might feel horrible to it. It could be that some varieties have needed to exist under open enough environments to require a bit of teleological function. And if that is the case, such a creature might have some conception of its past by means of a degraded re-firing of neurons. Surely some basic memory might then be adaptive. And might a sentient creature in the mud have some conception of its future and so have incentive to want to set things up for it? Obviously not as the human does, but perhaps in some sense. So if there are any sentient worms, it’s conceivable to me that something more than just primary consciousness might exist in them.

The “softwarism” term is simply one that I consider a bit more relevant than the standard “computationalism” term, and it doesn’t mean that I consider there to be anything spooky about software. It’s roughly the idea that consciousness exists in the human, like software exists in the computer. One implication of this is that anything can be sentient as long as it processes the right information. Taken to an extremity this view holds that certain symbol laden sheets of paper which are processed with the right lookup table into other symbol laden sheets of paper, will create something which feels what we do when our thumbs get whacked. I do believe that information is processed when our thumbs get whacked, but my naturalism prevents me from believing that any materials which process this information will thus create this pain. Thus I wouldn’t say that it’s possible for thumb pain to exist by means of symbols on paper processed into other symbols on paper. To me that’s spooky.

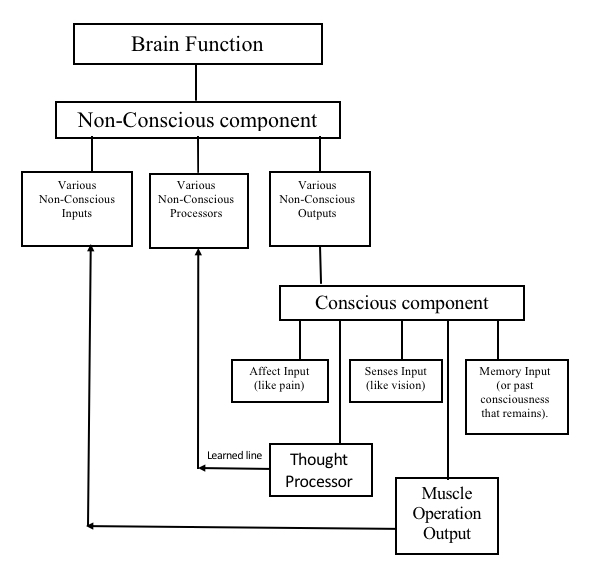

On predictions about my model, what I seek is for you and others to gain a working level grasp. For example, a student attending a physics lecture should not grasp the intimate details of a new idea at that point. It’s only while attempting to solve associated problems that the student should begin to grasp what the new idea both does and does not mean. So that’s what I seek. If you had a working level grasp of my ideas then you could effectively test them yourself and so bring in your own perspective. Here’s a simple diagram and some questions to potentially solve in these efforts. (I haven’t gotten into some of this here, so don’t worry about skipping.):

Is any part of the brain conscious, and if so, which?

What are the three forms of input to my conception of consciousness?

What does the conscious processor “do”?

What is the only non thought output of consciousness?

How is it that conscious function gets relegated to non-conscious function?

Are my ideas moral, immoral, or amoral?

What do I consider “good”?

In a theoretical sense how would one calculate the welfare of existing as a person? Or as a society?

If something happens to be good for something, why might this also be considered repugnant?

Conscious entities are self interested products of what?

If we’re all self interested from moment to moment, then why would we ever make apparent sacrifices for our futures?


Eric,

On panpsychism, it’s not so much diplomacy as pedagogical value. But it’s also probably easier for me to let naturalistic panpsychism in than you, since I don’t necessarily consider it wrong, just unproductive. (Dualistic panpsychism, of the type Philip Goff and others are pushing, I do think is outright wrong.)

You say you don’t project, then go on to do a lot of projecting. 🙂 To some degree, we have no choice but to project. Consciousness as a topic wouldn’t even exist without that projection. And yet, when it comes to non-human systems (or even immature or impaired human ones), we have to be cautious and not let sentiment cloud our judgment. (As you frequently point out, we can’t let morality dictate scientific conclusions.) The only way I know to do that is to insist on evidence. And the only evidence to establish that, say, a hooked worm is in some type of agony, is by behavior indicating affect rather than just reflex.

On software and computationalism, what if we made a modification to Searle’s thought experiment? Rather than using pencil and paper, what if Searle did everything with organic chemicals, receiving the question with electrochemical signalling, working through it using such chemicals, and expressing an answer with those electrochemical signals? In other words, he’s doing all the work with the same materials that the nervous system uses? Does that make a difference? If so, why?

On predictions, okay here goes:

Is any part of the brain conscious, and if so, which?

No

What are the three forms of input to my conception of consciousness?

Affect, sense, memory (I did cheat by looking at your diagram)

What does the conscious processor “do”?

Imaginative simulations for novel decision making

What is the only non thought output of consciousness?

Muscle operation

How is it that conscious function gets relegated to non-conscious function?

The development of habits

Are my ideas moral, immoral, or amoral?

amoral

What do I consider “good”?

Usefulness

In a theoretical sense how would one calculate the welfare of existing as a person? Or as a society?

The total amount of good feeling minus bad feeling

If something happens to be good for something, why might this also be considered repugnant?

The goodness might not reflect traditional morality, such as being hooked up to a pleasure machine

Conscious entities are self interested products of what?

evolution?

If we’re all self interested from moment to moment, then why would we ever make apparent sacrifices for our futures?

Long range interests over short range ones

Do I pass?


Mike,

It seems to me that it could be at least as easy to say that something is not sentient when it is, as say it is sentient when it’s not. You seem heavily concerned about the bias of our sympathy. But don’t forget about the opposing bias, or how we shouldn’t want to feel blamable for what we want to do. It’s surely most convenient for us to imagine that lower forms of life are no more sentient than plants are. Regardless, in these sorts of discussions I don’t mind getting into my own model of consciousness, as well as my theory of why consciousness evolved.

On Searle using the same materials that the brain uses to produce something conscious, this should only work if the physics of affect happens to be respected. Of course we don’t know what that physics is, but that’s what would be needed for this sort of thing to even conceptually work (given naturalism, that is). Interestingly it should be far simpler to use these materials to produce the experience of thumb pain than to produce something that actually understands Chinese! While producing thumb pain should simply require affect dynamics, producing something that “understands Chinese” should require a vast assortment of capabilities only known to exist in a human, and an educated one at that.

I do actually believe that in a conceptual sense brain information could be uploaded to a computer for non-sentient preservation. Furthermore I believe it could even be downloaded to a machine which thus produces something that feels like it’s the original person. For this to occur however, such a machine would at least need to respect the physics of affect given the premise of naturalism, and the softwarism perspective does not.

On your prediction of how I’d answer the provided questions, you did great! Let’s review.

Correct. As I see it brains produce consciousness, or a fundamentally different variety of computer. The first functions on the basis of neurons, while the second functions on the basis of affect. So this perspective is quite different from most.

Correct, and I was hoping that you’d know where you could get this information if it wasn’t initially in your accessible memory. Most models only address informational senses and affect this way, so I figured it might not be.

Yeah I’ll give you that, though technically I reduce this back to two technical components. First the conscious processor must interpret these inputs in order to grasp what’s coming in. For sense and affect inputs, the memory input helps with identification. I suppose that this incites many to classify memory as processing itself, which I don’t consider quite as productive.

Then secondly I consider this processor to construct scenarios about what to do given its affect motivation. Note that even the most basic conscious entity will need each of these components in at least some capacity in order to be productive.

Correct. I say “non thought” because technically thought can provide output, as in, “If this happens again, here’s what I’ll do about it…”. That would be an output which hasn’t been realized. But muscle operation is the only outward display of consciousness that I know of, and I consider the non-conscious brain to detect what the conscious entity wants to do, and so take care of moving these muscles appropriately.

Correct. In my diagram I signify this potential by means of a “Learned Line”. Here the conscious processor passes off appropriate tasks over to the non-conscious processor. The vast majority of what we commonly consider conscious behavior, such as speaking, seems heavily dependent upon brain function, which is to say learned non-conscious function.

Yep.

Okay, first miss. I could have accepted it if you had said “utility”, since that’s the term economists use for feeling good. Still I hate that term specifically given the standard “usefulness” connotation. But then you did get the next question right, so here you must have meant “usefulness” in terms of feeling good. So okay, accepted.

Right. It’s like a score that compiles over time. Furthermore it seems to me that the greatest pain that I could ever feel should be hundreds or thousands of times stronger than the greatest pleasure that I could ever feel. So even though a very happy life should be quite good to me, being tortured might quickly negate that good life when all taken together.

Actually what I was going for here is that conflicting interests often exist, and thus what can be good for one can be bad or horrible for others. Lying, cheating, and stealing might make a given person’s life feel much better than otherwise, and at far greater expense to others. Or I might decide to try the octopus dish at a restaurant, not knowing that the chef will torture this creature mercilessly for something that doesn’t really matter to me. Or there’s Derek Parfit’s Repugnant Conclusion, which I agree with, though reality is what it is anyway. A society of 100 trillion barely happy people might create more value than a society of 10 billion amazingly happy people. It is what it is.

Still your example does work as well. If people started getting electrodes put in their heads for “juicing” rather than worrying so much about standard life, many would find this escape repugnant, even if the recipients were not hurting others and were thus having amazingly good lives. The realities of what’s ultimately good, according to me, can be very different from what we want to be good, and it’s our morality paradigm which seems to prevent science from straightening this business out.