Victor Lamme’s recurrent processing theory (RPT) remains on the short list of theories considered plausible by the consciousness science community. It’s something of a dark horse candidate, without the level of support enjoyed by global workspace theory (GWT) or integrated information theory (IIT), but it gets more support among consciousness researchers than among general enthusiasts. The Michael Cohen study reminded me that I hadn’t really made an effort to understand RPT. I decided to rectify that this week.

Lamme put out an opinion paper in 2006 that laid out the basics, and a more detailed paper in 2010 (warning: paywall). But the basic idea is quickly summarized in the SEP article on the neuroscience of consciousness.

RPT posits that processing in sensory regions of the brain is sufficient for conscious experience. This is so, according to Lamme, even when that processing isn’t accessible for introspection or report.

Lamme points out that requiring report as evidence can be problematic. He cites the example of split brain patients. These are patients who’ve had their cerebral hemispheres separated to control severe epileptic seizures. After the procedure, they’re usually able to function normally. However, careful experiments can show that the hemispheres no longer communicate with each other, and that the left hemisphere isn’t aware of sensory input that goes to the right hemisphere, and vice versa.

Usually only the left hemisphere has language and can verbally report its experience. But Lamme asks, do we regard the right hemisphere as conscious? Most people do. (Although some scientists, such as Joseph LeDoux, do question whether the right hemisphere is actually conscious due to its lack of language.)

If we do regard the right hemisphere as having conscious experience, then, Lamme argues, we should be open to the possibility that other parts of the brain may have it as well. In particular, we should be open to consciousness existing in any region where recurrent processing happens.

Communication in neural networks can be feedforward, or it can include feedback. Feedforward involves signals coming into the input layer and progressing one way up through the processing hierarchy, toward the higher-order regions. Feedback processing goes in the other direction, with higher regions sending signals back down to the lower regions. This can lead to a resonance where feedforward signals cause feedback signals, which cause new feedforward signals, and so on: a loop, or recurrent pattern of signaling.
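A toy simulation can make the difference concrete. The sketch below is a hypothetical two-region chain, not Lamme's model: each region is a single number, the stimulus is on for the first few time steps, and `feedback_gain` (an assumed parameter) scales the signal the higher region sends back down. With no feedback, activity drains out of the chain within a step or two of the stimulus ending; with feedback, the loop keeps both regions active after the stimulus is gone, though it still fades because the round-trip gain here is below one.

```python
def simulate(feedback_gain: float, steps: int = 8, stimulus_steps: int = 3) -> list[tuple[float, float]]:
    """Return (lower, higher) activations per time step for a two-region chain.

    Hypothetical toy: the lower (sensory) region is driven by the stimulus plus
    feedback from the higher region; the higher region is driven only by the
    lower region's previous activation (the feedforward path).
    """
    feedforward_weight = 0.5  # assumed strength of the lower -> higher connection
    lower, higher = 0.0, 0.0
    history = []
    for t in range(steps):
        stimulus = 1.0 if t < stimulus_steps else 0.0
        # Both regions update simultaneously from the previous step's values.
        lower, higher = stimulus + feedback_gain * higher, feedforward_weight * lower
        history.append((lower, higher))
    return history


if __name__ == "__main__":
    for gain in (0.0, 1.5):  # 0.0 = purely feedforward, 1.5 = with feedback
        print(gain, [f"{lo:.2f}/{hi:.2f}" for lo, hi in simulate(gain)])
```

With `feedback_gain=1.5` the round trip multiplies activity by 0.75, so the loop outlasts the stimulus but does not sustain itself indefinitely; a gain pushing the round trip above one would instead run away.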

Lamme identifies four stages of sensory processing that can lead to the ability to report.

- The initial stimulus comes in and leads to superficial feedforward processing in the sensory region. There’s no guarantee the signal gets beyond this stage. Unattended and brief stimuli, for example, wouldn’t.

- The feedforward signal makes it beyond the sensory region, sweeping throughout the cortex, reaching even the frontal regions. This processing is not conscious, but it can lead to unconscious priming.

- Superficial or local recurrent processing in the sensory regions. Higher order parts of these regions respond with feedback signalling and a recurrent process is established.

- Widespread recurrent processing throughout the cortex in relation to the stimulus. This leads to binding of related content and an overall focusing of cortical processes on the stimulus. This is equivalent to entering the workspace in GWT.

Lamme accepts that stage 4 is a state of consciousness. But what, he asks, makes it conscious? He considers that it can either be the location of the processing or the type of processing. But for the location, he points out that the initial feedforward sweep in stage 2 that reaches widely throughout the brain doesn’t produce conscious experience.

Therefore, it must be the type of processing, the recurrent processing that exists in stages 3 and 4. But then, why relegate consciousness only to stage 4? Stage 3 has the same type of processing as stage 4, just in a smaller scope. If recurrent processing is the necessary and sufficient condition for conscious experience, then that condition can exist in the sensory regions alone.
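The staging, and the point where RPT parts ways with a GWT-style reading, can be summarized in a small sketch. The class and function names are hypothetical, and reducing each stage to a boolean is a deliberate simplification, not how Lamme operationalizes the stages. The only thing it encodes is the argument above: if recurrence is the criterion, stage 3 qualifies along with stage 4.

```python
from enum import IntEnum


class Stage(IntEnum):
    """Lamme's four stages, as summarized in the post."""
    LOCAL_FEEDFORWARD = 1   # superficial feedforward processing in the sensory region
    FEEDFORWARD_SWEEP = 2   # feedforward sweep across the cortex; can prime, not conscious
    LOCAL_RECURRENT = 3     # recurrent loops within the sensory region
    GLOBAL_RECURRENT = 4    # widespread recurrence; roughly "entering the workspace" in GWT


def classify(sweep_reaches_cortex: bool, local_recurrence: bool, global_recurrence: bool) -> Stage:
    """Return the highest stage a stimulus reached (hypothetical boolean summary)."""
    if global_recurrence:
        return Stage.GLOBAL_RECURRENT
    if local_recurrence:
        return Stage.LOCAL_RECURRENT
    if sweep_reaches_cortex:
        return Stage.FEEDFORWARD_SWEEP
    return Stage.LOCAL_FEEDFORWARD


def conscious_under_rpt(stage: Stage) -> bool:
    # RPT: recurrence itself is the criterion, so local recurrence (stage 3) suffices.
    return stage >= Stage.LOCAL_RECURRENT


def conscious_under_gwt(stage: Stage) -> bool:
    # GWT-style reading: only widespread recurrence (stage 4) counts.
    return stage == Stage.GLOBAL_RECURRENT


stage = classify(sweep_reaches_cortex=True, local_recurrence=True, global_recurrence=False)
print(stage.name, conscious_under_rpt(stage), conscious_under_gwt(stage))  # LOCAL_RECURRENT True False
```

Stage 3 is the only place the two predicates disagree, which is where the rest of the debate in this post is concentrated.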

But what about recurrent processing, in and of itself, makes it conscious? Lamme’s answer is that synaptic plasticity is greatly enhanced in recurrent processing. In other words, we’re much more likely to remember something, to be changed by the sensory input, if it reaches a recurrent processing stage.

Lamme also argues from an IIT perspective, pointing out that IIT’s Φ (phi), the calculated measure of consciousness, would be higher in a recurrent region than in one only doing feedforward processing. (IIT does see feedback as crucial, but I think this paper was written before later versions of IIT used the Φmax postulate to rule out talk of pieces of the system being conscious.)

Lamme points out that if recurrent processing leads to conscious experience, then that puts consciousness on strong ontological ground, and makes it easy to detect. Just look for recurrent processing. Indeed, a big part of Lamme’s argument is that we should stop letting introspection and report define our notions of consciousness and should adopt a neuroscience-centered view, one that lets the neuroscience speak for itself rather than cramming it into preconceived psychological notions.

This is an interesting theory, and as usual, when explored in detail, it turns out to be more plausible than it initially sounded. But it seems to me that it hinges on how lenient we’re prepared to be in defining consciousness. Lamme argues for a version of experience that we can’t introspect or know about, except through careful experiment. For a lot of people, this is simply discussion about the unconscious, or at most, the preconscious.

Lamme’s point is that we can remember this local recurrent processing, albeit briefly, therefore it was conscious. But this defines consciousness as simply the ability to remember something. Is that sufficient? This is a philosophical question rather than an empirical one.

In considering it, I think we should also bear in mind what’s absent. There’s no affective reaction. In other words, it doesn’t feel like anything to have this type of processing. That requires bringing in other regions of the brain, which aren’t likely to be recruited until stage 4: the global workspace. (GWT does allow that they could be recruited through peripheral unconscious propagation, but it’s less likely and takes longer.)

It’s also arguable that considering the sensory regions alone outside of their role in the overall framework is artificial. Often the function of consciousness is described as enabling learning or planning. Ryota Kanai, in a blog post discussing his information generation theory of consciousness (which I highlighted a few weeks ago), argues that the function of consciousness is essentially imagination.

These functional descriptions, which often fit our intuitive grasp of what consciousness is about, require participation from the full cortex, in other words, Lamme’s stage 4. In this sense, it’s not the locations that matter, but what functionality those locations provide, something I think Lamme overlooks in his analysis.

Finally, similar to IIT’s Φ issue, I think tying consciousness only to recurrent processing risks labeling a lot of systems conscious that no one regards as conscious. For instance, it might require us to see an artificial recurrent neural network as having conscious experience.

But this theory highlights the point I made in the post on the Michael Cohen study, that there is no one finish line for consciousness. We might be able to talk about a finish line for short term iconic memory (which is largely what RPT is about), another for working memory, one for affective reactions and availability for longer term memory, and perhaps yet another for availability for report. Stage 4 may quickly enable all of these, but it seems possible for a signal to propagate along the edges and get to some of them. Whether it becomes conscious seems like something we can only determine retrospectively.

Unless of course I’m missing something? What do you think of RPT? Or of Lamme’s points about the problems of relying on introspection and self report? Should we just let the neuroscience speak for itself?

If we “let neuroscience speak for itself” it will remain silent on our psychological notions, but we want to know how (or if) the psychological notions fit into the scientific picture. I’d be more curious to know if affect is truly unaffected by everything that fails to make it to the level of report. I really doubt that. Isn’t there something called subliminal advertising? These items that make it into iconic memory but not report, aren’t they generated with a similar stimulus?

I’m with you on letting neuroscience speak for itself. I do think we should be open to the likelihood that psychological concepts may not map cleanly to neurological realities, but ignoring what we’ve learned from psychology seems unproductive.

On subliminal stimuli, it’s worth noting that those are very brief, under 50 ms. I’m not sure they really make it into iconic memory. They probably are strong enough to cause stage 1 and 2, the feedforward sweep, which can result in unconscious priming. On whether they cause affects, they can certainly cause underlying physiological reactions, which if strong and persistent enough could eventually trigger an affect, but it’s far from guaranteed. But the underlying changes can alter behavior in an unconscious manner. (Although the effect is reportedly far weaker than pop psychology suggests. For instance, it actually is useless for advertising.)

Mike, thanks for doing the leg work!

Sorry, but all I can do is put this into my understanding of things. This theory, like nearly all theories, appears mostly right and a little wrong. Here’s how it fits into my understanding:

The basic unit of Consciousness, the psychule, looks like this:

Input—>[unitracker]—>Output(symbolic representation)

Input—>[mechanism]—>Output(functional value)

So this would be a single conscious-type process. If the functional output is a physical response with nothing else going on, you, Mike, would call this a reflex, and not enough for Consciousness. However, most philosophers talk about conscious “states”. When people talk about a “conscious state”, in my view they’re talking about a dynamic state where the psychule is happening over and over, i.e., recurring!

So you could set up a recurrent state that looks like this:

Input1—>[unitracker1]—>Output1–>[unitracker2]—>Input2–>[unitracker1]—>etc.

This would constitute a kind of local memory, but if nothing else happens with any output, there’s really no functional output, so most would say no Consciousness. Nevertheless you could squint and say the “memory” function is enough function to call this situation by itself Consciousness. That seems to be what Lamme is doing.
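James's notation can be read as function composition. The sketch below is an illustrative assumption, not his formalism: a hypothetical `make_unitracker` returns a function that emits a symbol when its pattern appears in the input, a hypothetical `mechanism` turns that symbol into a functional value, and `run_loop` passes Output1 to a second unitracker whose output re-triggers the first, so the representation persists after the stimulus only because of the loop.

```python
from typing import Callable, Optional

Unitracker = Callable[[str], Optional[str]]


def make_unitracker(pattern: str, symbol: str) -> Unitracker:
    """Hypothetical unitracker: emits `symbol` when `pattern` occurs in its input."""
    def track(inp: str) -> Optional[str]:
        return symbol if pattern in inp else None
    return track


def mechanism(symbol: Optional[str]) -> str:
    """Hypothetical mechanism: maps a symbolic representation to a functional value."""
    return "duck" if symbol == "LOOMING" else "carry on"


def psychule(inp: str, tracker: Unitracker) -> str:
    # Input -> [unitracker] -> Output(symbol) -> [mechanism] -> Output(functional value)
    return mechanism(tracker(inp))


def run_loop(stimulus: str, t1: Unitracker, t2: Unitracker, steps: int) -> list[str]:
    # Input1 -> [unitracker1] -> Output1 -> [unitracker2] -> Input2 -> [unitracker1] -> ...
    trace: list[str] = []
    signal = stimulus
    for _ in range(steps):
        out1 = t1(signal)
        if out1 is None:  # nothing recognized: the loop never starts (or stops)
            break
        trace.append(out1)
        signal = t2(out1) or ""  # unitracker2's output becomes unitracker1's next input
    return trace


t1 = make_unitracker("looming", "LOOMING")
t2 = make_unitracker("LOOMING", "looming")
print(psychule("object looming", t1))         # duck
print(run_loop("object looming", t1, t2, 3))  # ['LOOMING', 'LOOMING', 'LOOMING']
print(run_loop("empty road", t1, t2, 3))      # []
```

Note that `run_loop` produces nothing except its own trace: the loop "remembers" the stimulus, but nothing else acts on it, which is exactly the situation the comment says most people would not call consciousness.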

So what if something else happens with Output1 (besides also going to unitracker2), such as:

Input1–>[unitracker1]—>Output1–>[global workspace] —>OutputG1

OutputG1 goes to unitrackers throughout the globe, i.e., cortex. Whatever processes keep the unitracker1 process recurring will keep OutputG1 going out to the cortex recurrently, unless some other process (aka attention) prevents the output from affecting the global workspace.

It’s also possible that the Output1 of unitracker1 goes to other processes besides the global workspace, perhaps processes that control bodily movement, like processes for avoiding obstacles while walking, or driving. So what would happen if we take away the path that goes to the global workspace without damaging the other paths? If temporary, due to attention mechanisms, we get automatic driving. If permanent, due to physical damage, we get blindsight.

So it’s the OutputG1 that goes out to the cortex and is available to processes for reporting and recombining with other global outputs (OutputG1 + OutputG2) for imagination, etc.
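The routing described in this comment can be sketched as follows. Everything here is a hypothetical illustration: `Router`, the boolean gate, and the string signals are assumptions, not a model of any real circuit. The direct path always runs, while only the gated path turns Output1 into an OutputG1 that is available for report and recombination.

```python
from dataclasses import dataclass, field


@dataclass
class Router:
    """Hypothetical routing of Output1.

    workspace_gate_open=False stands in for attention being elsewhere
    (temporary: automatic driving) or damage to that path (permanent: blindsight).
    """
    workspace_gate_open: bool = True
    workspace: list[str] = field(default_factory=list)  # OutputG1 signals
    motor_actions: list[str] = field(default_factory=list)

    def route(self, output1: str) -> None:
        # The direct path to motor control is used regardless of the gate.
        self.motor_actions.append(f"avoid {output1}")
        # Only the gated path makes Output1 globally available as OutputG1.
        if self.workspace_gate_open:
            self.workspace.append(output1)

    def report(self) -> list[str]:
        return list(self.workspace)

    def imagine(self) -> str:
        # Recombining global outputs (OutputG1 + OutputG2).
        return " + ".join(self.workspace)


attending = Router()
autopilot = Router(workspace_gate_open=False)
for r in (attending, autopilot):
    r.route("pothole")
    r.route("cyclist")
print(attending.report(), attending.imagine())      # ['pothole', 'cyclist'] pothole + cyclist
print(autopilot.report(), autopilot.motor_actions)  # [] ['avoid pothole', 'avoid cyclist']
```

Both routers steer identically; only the one with an open gate can report or recombine what it steered around.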

So as you say, Mike, you can choose your own finish line, but they all start with the psychule.

*

Thanks James. And no worries about fitting it into your own understanding. It’s what we all do.

“Nevertheless you could squint and say the “memory” function is enough function to call this situation by itself Consciousness. That seems to be what Lamme is doing.”

It’s worth noting that the memory here would be short-term potentiation, that is, short-term changes in synapse strength. Very short-term in the case of iconic memory. (A few seconds according to Lamme, less than a second according to Wikipedia; I’m not sure which is more up to date.)

“It’s also possible that the Output1 of unitracker1 goes to other processes besides the global workspace,”

I think the way to think about it is that Output1 goes to lots of different recipients. If enough of them respond, it can escalate that output into [global workspace]. The only difference between Output1 and OutputG1 is the number of recipients. But if only a few respond (because selective attention is currently boosting another signal, or because those other regions are already habituated to that signal, etc.), then it can lead to the habitual or reflexive actions you noted.
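This alternative can be sketched as a threshold on how many recipients respond. The `ignition_fraction` threshold and the two reasons for not responding are hypothetical simplifications drawn from the parenthetical above; real workspace dynamics are graded and competitive rather than a single cutoff.

```python
from dataclasses import dataclass


@dataclass
class Recipient:
    """A hypothetical region receiving Output1."""
    habituated: bool = False           # already habituated to this signal
    attending_elsewhere: bool = False  # selective attention is boosting a rival signal

    def responds(self) -> bool:
        return not (self.habituated or self.attending_elsewhere)


def fate_of_output1(recipients: list[Recipient], ignition_fraction: float = 0.5) -> str:
    """Escalate to the workspace if enough recipients respond (threshold is assumed)."""
    responders = sum(r.responds() for r in recipients)
    if responders >= ignition_fraction * len(recipients):
        return "global workspace"
    return "habitual or reflexive action" if responders else "no effect"


fresh = [Recipient() for _ in range(10)]
mostly_habituated = [Recipient(habituated=True) for _ in range(8)] + [Recipient(), Recipient()]
print(fate_of_output1(fresh))              # global workspace
print(fate_of_output1(mostly_habituated))  # habitual or reflexive action
```

The same Output1 goes to the same recipients in both cases; what differs is how many of them respond.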

What I’ve been wondering lately is how widespread a signal can be if it never wins the workspace competition. Could it still make it into a regional working memory for instance? Sort of like what was described in the Cohen experiment.

“So as you say, Mike, you can choose your own finish line, but they all start with the psychule.”

Makes sense. But isn’t a psychule involved in any information processing? Or is it uniquely neural in nature? Wouldn’t unconscious processing involve them too? Or am I misunderstanding them?

I think the recursion by itself, without synapse changes, would constitute memory. Changes in synapses would constitute a separate kind of memory.

I don’t think this is correct. Output1 will always go to all its possible recipients unless suppressed in transit. Think of it in terms of internet architecture. The output of unitracker1 (me) goes to a central workspace (WordPress computers?), and becomes available to other unitrackers (like you). The alternative (which you seem to be suggesting) is that my output goes directly over lines between me and each possible recipient. A few (“nearby”?) recipients pay attention, and if they decide, tell other recipients to pay attention, and if enough are paying attention, it goes viral/global. But this requires that every unitracker is directly wired to every other unitracker. Seems unlikely, is all.
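The wiring concern can be put in numbers. Under the simplifying assumption that "directly wired" means one directed link per ordered pair of unitrackers, full point-to-point wiring grows quadratically while a hub grows linearly. The unitracker counts below are arbitrary illustrations, not estimates of the number of cortical columns.

```python
def point_to_point_links(n: int) -> int:
    """Directed links if every unitracker is wired directly to every other one."""
    return n * (n - 1)


def hub_links(n: int) -> int:
    """Directed links if every unitracker sends to and receives from a single hub."""
    return 2 * n


for n in (10, 1_000, 100_000):
    print(f"{n:>7,} unitrackers: {point_to_point_links(n):>14,} direct vs {hub_links(n):>7,} via hub")
```

For 1,000 unitrackers that is 999,000 direct links against 2,000 via a hub; intermediate wiring schemes fall somewhere between these two extremes.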

Well, in my paradigm of column = unitracker, it only takes one axon to go to the global workspace, and there are lots of other axons out of the column, including to other subcortical regions (I assume).

“But isn’t a psychule involved in any information processing?”

Lots of definitional issues. What do you mean by information processing here? Also, just as choosing the finishing line is arbitrary, choosing the starting line is arbitrary. I could say the psychule is just Input—>[mechanism]—>Output. That gives panpsychism! Or I could say the psychule is the same, but the Output has a function. So, chemotaxis counts. Functionalism! Is that information processing? I chose what I chose above because that level is needed to explain qualia.

“Or is it uniquely neural in nature?”

All mechanisms are multiply realizable, so neurons not necessary.

“Wouldn’t unconscious processing involve them too?”

This is a perspective question. If a process is a Consciousness-type process, but is not accessible by a given system, it is “unconscious” relative to that system. So your conscious processes are unconscious relative to me. Likewise, some processes in you may be conscious relative to one or more systems in you, but unconscious to others. Most people associate their own consciousness with those processes about which the system can report. Thus the “autobiographical self”. That’s also why when you cut the corpus callosum, split the brain, conscious processes in one side become “unconscious” relative to the other side. They are still conscious processes relative to their respective sides.

*

“I think the recursion by itself, without synapse changes, would constitute memory. Changes in synapses would constitute a separate kind of memory”

I see what you’re thinking here, but I think the recurrence itself is best simply thought of as a loop. If nothing happens synaptically, then as soon as the stimulus ends, the pattern disappears, and it can’t be retrieved, even milliseconds later. On the other hand, if synapses have strengthened, even fleetingly, then reactivating part of the pattern might make some semblance of the stimulated pattern reappear.
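The contrast between a loop that simply vanishes and a pattern that can be partially reactivated is the idea behind attractor networks. The sketch below swaps in a textbook Hopfield-style toy with a Hebbian outer-product rule; it illustrates pattern completion under that assumption and is not a claim about how iconic memory is implemented. With strengthened weights, half of the pattern is enough to restore the whole; with unchanged (zero) weights, the same cue restores nothing.

```python
Pattern = list[int]  # entries are +1 / -1; a 0 in a cue means "not reactivated"


def potentiate(pattern: Pattern) -> list[list[float]]:
    """Hebbian outer-product weights for one stored pattern (no self-connections)."""
    n = len(pattern)
    return [[0.0 if i == j else pattern[i] * pattern[j] / n for j in range(n)]
            for i in range(n)]


def reactivate(weights: list[list[float]], cue: Pattern, steps: int = 5) -> Pattern:
    """Repeatedly set each unit to the sign of its weighted input."""
    state = list(cue)
    for _ in range(steps):
        state = [1 if sum(w * s for w, s in zip(row, state)) >= 0 else -1
                 for row in weights]
    return state


stimulus = [1, -1, 1, 1, -1, -1, 1, -1]
half_cue = stimulus[:4] + [0, 0, 0, 0]            # only part of the pattern reactivated
unchanged = [[0.0] * len(stimulus) for _ in stimulus]

print(reactivate(potentiate(stimulus), half_cue) == stimulus)  # True
print(reactivate(unchanged, half_cue) == stimulus)             # False
```

With zero weights every unit receives no input and defaults to +1, so the output carries no trace of the stimulus.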

On the workspace, you seem to be seeing just two possibilities, a centralized storage area, and a completely egalitarian meme forwarding arrangement. I think it’s more of a hybrid. A region transmits information to the regions it’s connected to. Some of those regions are excited by that signal and establish recurrent connections, while also forwarding it on to other regions which may establish yet more binding connections.

If certain highly connected regions become excited (what researchers typically mean when they talk about “where” the workspace is), they propagate that signal, or compatible signals, in favor of others. If the signal and compatible signals come to dominate the network, it becomes available for being indexed for long term storage, it may cause affects, is available for report, etc. In other words, it enters consciousness.

But it also needs to be emphasized that all the major cortical regions are heavily interconnected with each other.

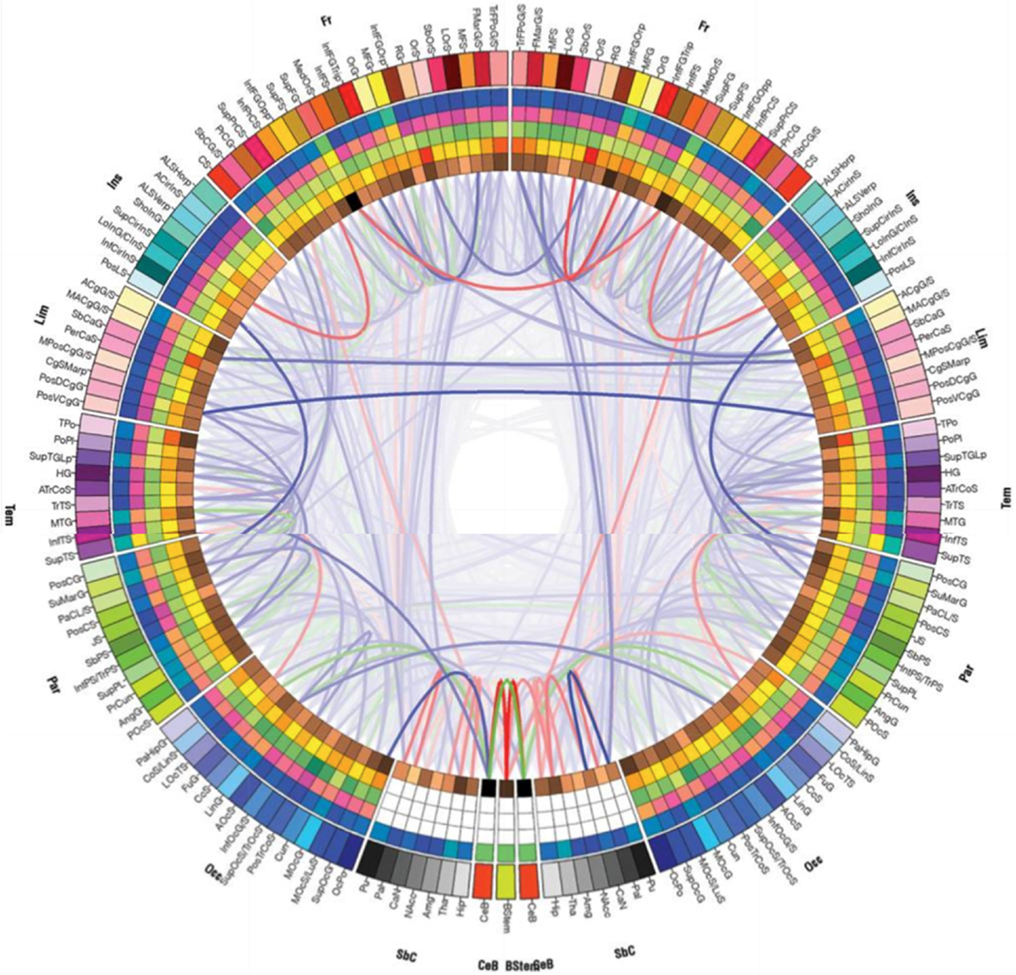

https://www.frontiersin.org/articles/10.3389/fpsyg.2013.00200/full

On my question regarding unconscious processing, good answer, and in truth that was a bad question on my part. There is no processing that is, in and of itself, conscious or unconscious, just processing that has either had widespread causal effects, or hasn’t, which is more about the response of the overall system than the processing itself. (Although there is some processing that is definitely unconscious, just because of brute connectivity limitations, such as the autonomic functions in the lower brainstem.)

Exactly what would you say gets “forwarded to other regions”? Suppose we have regions A, B, C, and D. A and B can transmit to C. How many different signals can A transmit to C? How many other regions can transmit to C? C “forwards” either A or B or C (or in some combination) to D. How many possibly different signals can C forward to D? How many other C’s are forwarding that many signals to D? Seems like D has to differentiate just a whole bunch of (analog-ish) signals, pretty much without fail. And does this issue go in the other direction from D to C? Do you avoid loops, like C—>D—>E—>C ? Seems like a hub architecture would work so much better.

*

The amount of information that can be transmitted between regions really comes down to the number and thickness of the axons running between them. And I guess their firing rate. (Pyramidal neurons tend to be thick and high performing. They’re the myelinated ones.) And it’s not that these regions are getting a copy of the content in the source region. They’re receiving signals that cause changes in their state. It’s about the original content having causal effects throughout the system.

Remember that recurrent processing is the goal, so looping is going to happen. It’s a feature. But to your question, the system sometimes doesn’t work right, and gets stuck in an undifferentiated feedback loop, which we commonly refer to as a seizure. But in most cases, the loop firing quickly fades unless there is ongoing stimulation.

On the hub architecture, it might well work better for a computer system with a data bus and random access memory. But have you considered how well it would work in a physical neural network with the content and instructions all tangled together? Think about how much plasticity that region would need to have.

[back to the reply zone]

Um, thickness of the neuron doesn’t enter into the amount of information a signal carries. Myelination mostly affects the speed at which the signal is propagated along the axon. The paradigm signal for a neuron is one bit, on or off. If you use firing rate, I think neurons can reliably distinguish up to about 10 possible rates, and when neuroscientists discuss firing rates they usually talk in terms of a handful of bands with Greek letter names.
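The information arithmetic behind this exchange is a base-2 logarithm: a signal that can take k reliably distinguishable values carries at most log2(k) bits. Taking the figure of roughly 10 distinguishable rates at face value (it is disputed in the thread), each signal carries about 3.3 bits. Under the further assumption that parallel axons carry independent signals, capacity scales with the number of axons. The axon count below is an arbitrary illustration.

```python
import math


def bits_per_signal(distinguishable_levels: int) -> float:
    """Upper bound on information in one signal with k distinguishable values."""
    return math.log2(distinguishable_levels)


def bits_across_axons(axons: int, distinguishable_levels: int) -> float:
    """Assumes each axon carries an independent signal (an idealization)."""
    return axons * bits_per_signal(distinguishable_levels)


print(bits_per_signal(2))                   # 1.0  (on/off)
print(round(bits_per_signal(10), 2))        # 3.32
print(round(bits_across_axons(1_000, 10)))  # 3322
```

These are bits per signaling window; how long a window a downstream neuron needs to tell rates apart is a separate question the arithmetic leaves open.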

If the regions are not getting a copy of the information, in what sense is the information globally broadcast? In what sense is a prefrontal region getting the information “blue ball 2 feet in front of me” from the visual region?

[I only care about the looping above if there is a copy of the information being forwarded, but if there is no such copy, again, how is the information globally broadcast?]

Finally, Semantic Pointer Architecture seems to work just fine as a hub.

*

The diameter of an axon affects the conduction velocity. Higher bandwidth means more data. (Usually.)

I’m not sure I understand, “neurons can reliably distinguish up to about 10 possible rates”. Are you saying downstream neurons can only be excited by one of those rates? Or that neurons overall can only fire at those rates? Either way, I’d be very interested in the source material for that.

“If the regions are not getting a copy of the information, in what sense is the information globally broadcast?”

Remember that none of these regions are conscious, so none of them by themselves have a comprehensive understanding of the information. They receive signaling relevant to their function. So the hippocampus receives signalling that may or may not cause it to flag the content for later rehearsal for long term storage.

In terms of “globally broadcast”, the word “broadcast” gets something like the basic idea across, but it’s one of those terms that makes progress but requires cleanup afterward. A better way to think about it is global causal effects. Carruthers dislikes it, but I still think Dennett’s “fame in the brain” analogy works well too.

“Finally, Semantic Pointer Architecture seems to work just fine as a hub.”

Based on what I understand about them (which remains hazy), they also work in a distributed fashion, as what each region holds about the original source content. A principle of neural architecture appears to be to store data where it’s acted upon. In that sense, it makes more sense for them to be distributed throughout the brain.

This and other blogs raise a broader question for me of what we seek to do in putting forward named theories of consciousness, then evaluating them against some mix of introspection, neuroscience and philosophy. Do we know what question we are trying to answer, and what a good answer would look like?

Compare it with seeking an understanding of water. Would we feel we understood water if we knew about two hydrogens and one oxygen; or predicting its flow via the Navier-Stokes equations; or accounting for wetness; or knowing what it is to go surfing? These all seem to require entirely different types of understanding.

Perhaps this is about being clear what the question is that we are seeking to answer?

That is the difficulty. Baars points out that our definitions have to change as we learn more, and that this is a normal part of science. https://bernardbaars.com/2020/01/01/observational-definitions-of-consciousness/

With all the definitional issues, the attitude of most cognitive neuroscientists appears to be not to take on consciousness directly, but to learn as much about cognition as possible and hope it eventually enables progress in theories and philosophy. If you read a book on cognitive neuroscience, you’ll likely see very little about consciousness per se, but a lot about capabilities normally considered part of it.

That said, most of us don’t keep up with all the minutiae in cognitive neuroscience. I learn a lot about it by reading these various theories. So for me, they all add to the mix.

Ultimately, I doubt there will ever be one theory that fully explains consciousness. Just like there was never any one explanation for biological vitalism, but a wide variety of organic chemistry, I suspect the same will be true for consciousness.

I am fascinated by the fact that if an athlete wants to perform at a very high level, he needs to control his conscious thinking to keep it out of the performance. The performance is driven by unconscious functions. And the comment that the right hemisphere may not be conscious because it lacks language is interesting, but … so what? Our consciousness seems to float along above our lives thinking about all sorts of things, while unconsciously we drive our cars, swing golf clubs, and type on a keyboard. So, which is the more important?

We have just established that unconscious functions use the same areas of the brain as conscious ones do. That is the first concrete fact about our unconscious functions that I have seen. Do we need to define/explain consciousness to study all of the unconscious competencies we have?

We need consciousness to learn (or maybe it is learning as I have argued) but the ultimate goal is to be able to act with as little consciousness as is required. To be automatic is faster and frees consciousness for (learning) the things that are not currently automated or dealing with uncertainty.

I quoted Solms in my last post on the topic:

“It appears that consciousness in cognition is a temporary measure: a compromise. But with reality being what it is—always uncertain and unpredictable, always full of surprises— there is little risk that we shall in our lifetimes actually reach the zombie-like state of Nirvana that we now learn, to our surprise, is what the ego aspires to.”

That kind of reminds me of the sea squirt, which eats its own brain once it doesn’t need it anymore. (Not that anyone thinks they’re conscious.)

But it does line up with Kanai’s argument that the function of consciousness is imagination, or planning, even if only planning for the next few seconds.

Not really. Cognitive neuroscientists and psychologists have been studying unconscious competencies for decades.

I think an activity becomes unconscious when most of the specialist processes in the brain become habituated to the stimulus that triggers it, so that only the specialist tasks needed now react to it. If an athlete or anyone else does get their consciousness involved, such as by having affective anxiety drive it into the workspaces, then the majority of specialist processes which have nothing productive to add become involved, and compromise the motor output. Translation: they choke.

Mike,

A point of clarification. Several times in this post you spoke of various regions of the brain potentially “having” consciousness. I wonder if you’re averse to instead saying that they potentially “produce” consciousness? I personally consider no areas of my brain whatsoever to exist as my phenomenal experience, though I’m quite sure that various areas of it do produce what I experience. Similarly I don’t say that any light bulb exists as light, but rather can potentially produce it. And note that while this particular example does get into EM fields, brains could potentially still produce consciousness by means of information alone if that’s your conviction. If you believe that certain parts of your brain literally “are” conscious however, then that would be something to get into. And if not then you may consider me a bit anal-retentive for mentioning this. But in speculative matters like this one, I do consider precise language crucial.

Eric,

I didn’t purposefully avoid that language, but it really reflects your understanding of consciousness rather than mine. I’m sure if you delve into the archives, you’ll find occasions of me using that kind of language, and it’s possible you’ll catch me using it casually in the future, but only in a metaphorical sense.

I don’t think the brain produces consciousness in any sense of it then existing aside and apart from the brain. I can’t see any reason to think consciousness is a glow, field, radiation, ghost, or anything like that. I see it as one of the things the brain does, not something it produces. But I don’t think it can be localized to any one region, unless you want to refer to the entire thalamo-cortical system as a region.

I suppose you could argue it produces the contents of consciousness. But to me that’s like saying Windows produces its internal data structures. I think it’s more productive to think of that as the product of components in the system, intermediate states used in its processing.

So yeah, different understandings of consciousness, and my language reflects mine.

Mike,

You and I believe that brains do not produce consciousness in any manner apart from brain itself, of course, just as light bulbs and electricity do not produce light in any manner apart from what they are. But if you’d rather say that these structures “do” these things rather than “produce” them, well I can go with that. As long as you aren’t saying, as suggested, that any part of the brain actually exists as phenomenal experience! That would be way too strange.

In truth I wasn’t attacking the idea that phenomenal experience exists as information itself. Yes, that does seem funky to me, though it’s a paradigm that I can go along with for now. It might be that when a given machine processes certain information, it “does” phenomenal experience (or produces something that feels it). My own brain architecture works fine under such a theory. And it seems to me that even if GWT or RPT happen to be effective models of how phenomenal experience is created, this might be through associated EM fields rather than information alone. Of course in the end we’ll need to let the evidence decide this matter for us.

Eric,

“As long as you aren’t saying, as suggested, that any part of the brain actually exists as phenomenal experience! That would be way too strange.”

That’s basically RPT’s proposition, that sensory regions can have experience. It’s not as obviously false as I thought it would be. Lamme does have a logic to his thinking, logic that’s worth understanding, because it does get at what might be an important prerequisite of consciousness: recurrent processing. I just don’t agree with him that it’s the only prerequisite. And his stage 4 is a nice alternate description of GWT.

“Of course in the end we’ll need to let the evidence decide this matter for us.”

In the end, that’s the final arbiter. The problem is that we don’t get evidence for something not existing. We only fail to find such evidence. We have to use parsimony to cull such theories. Of course, that’s always a matter of judgment, leaving space for cherished theories with little to no empirical support.

Mike,

I’m not saying that recurrent processing and/or GWT are wrong (though I consider their product to not be “brain” itself, but rather a potential product of what it does). Maybe one or both help create something with phenomenal experience. And maybe even in conjunction with EM fields. Evidence will be needed.

Right, but no one is suggesting that phenomenal experience doesn’t exist. Or that EM fields don’t exist. Or even that processed information doesn’t exist. It’s simply speculative today what it is that creates phenomenal experience. And if professionals in general believe that some manifestation of information itself is what makes this happen, well I can go with that for now. Rene Descartes wouldn’t have gotten far under the Catholic Church as a naturalist. At least the software to consciousness paradigm is one that I’m able to play ball with.

Eric,

Some people do suggest that phenomenal consciousness doesn’t exist, but they spend a lot of time explaining their view. I think it exists, but that it isn’t anything non-physical, or anything a machine couldn’t have, at least in principle.

“It’s simply speculative today what it is that creates phenomenal experience.”

Not all speculation is equivalent. Each assumption, or postulate, is another opportunity to be wrong. So theories with fewer assumptions have a higher chance of being right than theories with more assumptions. It’s why I favor theories that hew closely to cognitive neuroscience data. To me, the more theories posit mechanisms no one has detected yet, the more assumptions they’re making.

“And if professionals in general believe that some manifestation of information itself is what makes this happen, well I can go with that for now.”

It depends on which professionals you talk with. Philosophers appear to be all over the map. Most neuroscientists are in the information camp, but even they have a minority who roar at the winds of computation.

I meant sensible people, Mike. I don’t think I could consider anyone with that position sensible, or at least not in that regard. Of course you and I believe that phenomenal experience functions by means of causal dynamics of this world. “Life” will have no monopoly on such physical processes, and so it must be possible for technological machines to exist such that phenomena are experienced.

I also appreciate parsimony where it can be found. Effective reductions help us understand things. But in the natural world a given idea might remain too simple to effectively describe how something transpires. If you like I could get into why it is that information processing alone shouldn’t be sufficient to create phenomenal experience. Or we could skip that and presume that information itself is nevertheless sufficient, and so discuss how GWT and/or RPT might be useful ideas on that front.

For example you once proposed to me that the global workspace might, “in some emergent information architecture sense”, create a non-brain sentient entity, or consciousness as I see it. If so, then it could be that the great focus in academia upon what’s known as “access consciousness” might be hindering effective modeling. Instead all consciousness would be phenomenal (or affective), though some of it would tend to provide information to an evolved entity as well. Here I’ve reduced the “access” idea to something more basic: an element of phenomena/ affect. And if GWT sees phenomena as inherent to access anyway, reducing access to phenomena might help improve the theory.

Eric,

“I don’t think I could consider anyone with that position sensible, or at least not in that regard.”

Hmmm. Well, I should note that while I’m not an illusionist, my primary difference with them is terminological rather than ontological. What they’re generally saying doesn’t exist is the non-physical version of phenomenal consciousness, or some version above and beyond the operations of the brain. I agree with that. What I disagree with is their failure to be explicit, to not admit that a more deflated pre-theoretical version of it does exist. (In their defense, they often do try to clarify that, but as a follow up, when people have usually stopped listening.)

“If you like I could get into why it is that information processing alone shouldn’t be sufficient to create phenomenal experience.”

You’ve been over the Searle-type arguments that appeal to you many times: Chinese room, computation requires interpretation, etc, and I’ve given the standard computationalist answers to them. Are there others we haven’t gone into yet?

“For example you once proposed to me that the global workspace might, “in some emergent information architecture sense”, create a non-brain sentient entity, or consciousness as I see it.”

I should clarify that I was talking in terms of weak, or epistemic emergence rather than any kind of strong, or ontological emergence. The “non-brain” phrase makes me wonder if we’re actually on the same page. I do think consciousness is emergent from brain operations, and GWT is a plausible framework for how that emergence happens, but that emergence is in the same sense that temperature is emergent from particle kinetics, in that it is what it’s emergent from. For me, we’re just talking at a different level of organization from that of neurons, not something the brain produces and then exists in addition to it.

Mike,

I didn’t realize that you were merely referring to illusionists supposedly believing that phenomenal experience doesn’t exist. As I understand it they don’t exactly believe that (as your reply suggests), but rather use a title which suggests this merely in order to claim that phenomenal experience exists differently than it’s perceived to exist. I’ve lately been bitching them out here, not exactly because they believe stupid things, but rather because some of them are so skilled that they can use accepted ideas to fool educated people into believing that they’re thus extra insightful. As I’ve said, apparently they can be tricky enough to “sell ice to indigenous people of the far north”.

On computationalism, or better yet softwarism, or better yet informationalism, no I haven’t thought up any new ways to explain that this is actually a supernaturalist position. If you saw this as I do then of course you’d change your mind about it, though perhaps some day you will. James just brought this up so I’ll try my luck with him.

On you speaking of epistemic rather than ontological emergence, well of course. I was too. We’re monists. This is to say that we believe everything is one kind of stuff that functions causally, though it’s still useful to define certain elements of reality in ways that are different from others. When I say that my brain functions as a vast non-conscious computer that creates a tiny conscious computer, this is an epistemic position. In the end there aren’t really any “computers” here, but rather just a bunch of interconnected causal events.

Anyway my point is that even if phenomenal experience does emerge from nothing more than information, or beyond the substrate which conveys it, this doesn’t upset my own psychology based models. Furthermore it seems to me that the popular notion of “access consciousness” hinders mental and behavioral sciences today in general. I propose a different perspective.

Eric,

On the illusionists, I don’t know that they could sell ice to the Inuit. They don’t seem to be making much headway in selling their ideas. That’s my chief beef with them, that the terminology isn’t really working.

“If you saw this as I do then of course you’d change your mind about it, though perhaps some day you will.”

And vice-versa. Honestly, at this point, to change my mind, so much neuroscience would have to turn out wrong that it’s hard to see it happening. What might be more plausible is finding out that the way the nervous system processes information is so tied to its microstructure that its functionality can’t be reproduced except in a very similar type of substrate. But given AI successes so far, that seems dubious.

“When I say that my brain functions as a vast non-conscious computer that creates a tiny conscious computer, this is an epistemic position.”

Good to hear.

“Furthermore it seems to me that the popular notion of “access consciousness” hinders mental and behavioral sciences today in general.”

What in particular about it do you think is hindering things?

Mike,

Is the illusionist position anything more than the quite accepted Kantian position that our phenomena are different from noumena? Are they saying anything controversial? I recall listening to Keith Frankish on Rationally Speaking recently, and though he claimed to be in some kind of minority which is able to provide profound insights, I didn’t perceive him to say anything controversial. Well… beyond the title that his movement has taken itself. So my beef with them is essentially what you’ve mentioned. Furthermore I think it’s the problems associated with philosophy and our soft sciences in general which permit a person to take such a standard position, and then use fancy arguments to suggest that it’s not actually accepted in order to become more distinguished.

So lots of neuroscience demands that the information alone of neuron firing causes phenomenal experience, and thus could occur by means of any substrate at all which correctly processes such information? Can you provide any examples? My current position is not based upon any neuroscience at all, but rather my understanding that no causal dynamics whatsoever exist independently of associated substrate.

My problem with the “access consciousness” term is that I think it adds an unneeded element in itself that hinders effective reductions here. I believe that my more parsimonious model (which is founded upon phenomenal experience alone, or affect), is able to present far more effective brain architecture. To potentially demonstrate this, if you like you could propose some scenarios in the form of “How does your model address [this]?”

Eric,

“Are they saying anything controversial?”

Given all the dualists, panpsychists, and idealists running around in philosophy justifying their stance on the hard problem, yes, I do think the illusionists are saying something controversial. It may not be all that controversial among many scientists, although like for me, the terminology often is, and many of them are emergentists, but large swathes of the philosophy of mind aren’t there yet.

“So lots of neuroscience demands that the information alone of neuron firing causes phenomenal experience,”

Yes. The sources are reputable neuroscience books (examples: Neuroscience for Dummies; Neuroscience: Exploring the Brain by Mark Bear, where the older edition is affordable; or, if you really want to get technical, Principles of Neural Design and Principles of Neural Information Theory). That’s not to say that the neural processing isn’t heavily interactive with the body and environment, but neural activity is the main action.

“and thus could occur by means of any substrate at all which correctly processes such information?”

As I noted above, there remains space for the information processing to require the biological neural substrate. Given past successes, I think this is unlikely, but it can’t be ruled out yet. Maybe we’ll eventually hit some wall that prevents further progress, but there’s no sign of it yet.

“Can you provide any examples?”

I can’t provide examples for the absence of something. The onus is on someone who claims that something non-informational is happening to provide examples. I’ve actively sought such examples from people making those claims over the years. When provided, they always turn out to still just be information processing. For example, Panksepp often cited brainstem activity as non-computational, but his definition of “computation” ended up amounting to what happens in the cortex, which is circular, and everything I’ve read about brainstem activity implies that it’s completely information based.

“To potentially demonstrate this, if you like you could propose some scenarios in the form of “How does your model address [this]?””

An important tenet in cognitive theories is that access is phenomenality, and that our ability to reduce access to its mechanisms is a reduction of phenomenality. What’s your alternative to reduce phenomenality?

Mike,

Well okay, if Dennett and Frankish are simply trying to be good naturalists then I can at least try not to be so critical of them. If “illusionism” is merely supposed to counter funky notions, maybe it’s okay. Maybe it’s not just another joke in the field.

My own way to fight dualism is to advocate the creation of a respected community of professionals with accepted principles of metaphysics, epistemology, and axiology from which to better found the institution of science. If this proposed community had my single principle of metaphysics then there wouldn’t be any need to assess dualistic notions. It reads, “To the extent that causality fails, nothing exists to discover”. In that case dualists would reside over “quasi-science”, while naturalists would reside over “science”. So whenever someone would try to bring dualistic notions over to science, the response would be “Sorry mate, but you’ll have to take that over to quasi-science.” Problem solved!

Well if that’s the case then we should expect reduced arguments to this effect — not just “If you learn neuroscience, then you’ll also believe that phenomenal experience reduces back to anything that provides the proper information”. And I think you’ve mentioned that some neuroscientists strongly oppose this idea.

I’d love to see the responses of neuroscientists to my non-ambiguous thought experiment. What percentage would say that brain information on sheets of paper that’s properly processed into other brain information on sheets of paper, should be sufficient to create an entity that feels what we do when our thumbs get whacked?

But here you’ve wrongly colored my position. I do not believe that biological processes are required for phenomenal experience to exist — biology reduces back to physics. Get the physics right in a given machine, and I believe that phenomenal experience is mandated to result.

But I also haven’t claimed that anything non-informational is happening. Notice that in my reply to James below I defined information as something which is crucial to the existence of computation. Here the “information” term refers to a relatively low energy type of input that a machine might process in an assortment of ways. I theorize the world’s first information processor to be genetic material, with central organism processors second, the conscious form of function sometimes produced by a brain third, and our technological computers last. None of these machines function without information, though associated physics is required as well.

I reduce this stuff to a punishment/ reward dynamic — all that is good/ bad for anything that exists. It’s essentially the fuel which drives functional forms of consciousness, somewhat like the way electricity drives the computers that we build. Furthermore the naturalist will mandate that there be physics based mechanisms which cause it, as surely transpires in my own brain.

I don’t believe that I’m asking you to give up any solid elements of neuroscience, but simply suggesting that you add one extra step in order to kill the dubious notion that phenomenal experience transpires by means of information alone. In order to have this effect, naturalism mandates that such information animate physics based mechanisms.

Eric,

“It reads, “To the extent that causality fails, nothing exists to discover”. In that case dualists would reside over “quasi-science”, while naturalists would reside over “science”.”

Here I think is a difference between us. You seem to see naturalism trumping science, to the extent that you’ll constrain science to fit in it. I don’t. If someone did show up with a posteriori evidence of dualism, I would accept that we would have to deal with it. Naturally I’d be suspicious of the evidence and insist that it be reproduced or otherwise verified, but if it was, I would be prepared to modify whatever a priori principles I thought about the world as necessary to understand what was happening.

“Here the “information” term refers to a relatively low energy type of input that a machine might process in an assortment of ways.”

It’s interesting you describe it that way. One description I sometimes use as a mark of a system that is primarily engaged in information processing is the ratio of its causal differentiation to the energy involved. Computational systems typically have a very high causal differentiation at a low energy level. In that sense, the nervous system operates at a very low energy level. Action potentials are typically measured in millivolts, protein circuits operate near the thermodynamic limit, and the entire brain reportedly operates on 12 watts. The brain seems to pass your criteria.
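To put a number on the “thermodynamic limit” mentioned above, the usual reference point is the Landauer bound, k_B·T·ln 2, the minimum energy needed to erase one bit. The sketch below assumes a body temperature of 310 K and takes the 12 watt figure from the comment at face value; it only computes a theoretical ceiling on bit erasures per second at that power budget, not an estimate of what the brain actually does.

```python
import math

BOLTZMANN = 1.380649e-23  # J/K, exact SI value


def landauer_limit(temperature_k: float) -> float:
    """Minimum energy (joules) to erase one bit at the given temperature."""
    return BOLTZMANN * temperature_k * math.log(2)


def max_bit_erasures_per_second(power_w: float, temperature_k: float) -> float:
    """Upper bound on irreversible bit erasures per second for a power budget."""
    return power_w / landauer_limit(temperature_k)


BODY_TEMP_K = 310.0   # assumption: roughly 37 degrees Celsius
BRAIN_POWER_W = 12.0  # figure quoted in the comment; common estimates are nearer 20 W

print(f"Landauer limit: {landauer_limit(BODY_TEMP_K):.3e} J/bit")
print(f"Ceiling: {max_bit_erasures_per_second(BRAIN_POWER_W, BODY_TEMP_K):.3e} erasures/s")
```

The bound at body temperature comes out around 3 × 10⁻²¹ J per bit; real neurons spend far more than this per operation, so the calculation only shows the scale of the floor being discussed.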

“But I also haven’t claimed that anything non-informational is happening.”

“None of these machines function without information, though associated physics is required as well.”

So, given that these two sentences seem contradictory, I have to admit I’m not sure anymore exactly what you’re claiming with the last part of the second one.

“I don’t believe that I’m asking you to give up any solid elements of neuroscience, but simply suggesting that you add one extra step in order to kill the dubious notion that phenomenal experience transpires by means of information alone. ”

You say it’s a dubious notion. Why? What about it makes it dubious? I understand your strong dislike of it, but that alone isn’t sufficient. What evidence or logic can you muster for the conclusion that something else is necessary? What can you tell me so that accepting it wouldn’t be a violation of Occam’s razor?

Mike,

Yes I will constrain the humanly fabricated “science” term to the tenets of perfect causality. But note that I’m also able to admit that perfect causality may be wrong in the end. I don’t consider what’s true in the end to determine how it’s most effective to define the “science” term. So without causality science can be wrong while quasi-science can be right. But here’s the kicker. It seems to me that it’s only under the dynamics of causality/ science that it’s possible for anything to ever become “figured out”. I don’t differentiate science from faith at causality for the reason that it’s true, but rather because figuring things out becomes obsolete to the extent that causality fails; a causal path is quite mandated here. Without this particular bit of metaphysics, it seems to me that the institution of science today is not yet founded as well as it might be.

Actually it seems to me that I got this “low energy input” parameter from you. Thanks!

Hmm… I see no contradiction here. Perhaps too many double negatives confuse things or something like that? But here’s an example that should be plain. The processor of a computer with a screen hooked up to it can make that screen’s pixels light up in associated ways. Even if the computer isn’t hooked up to that screen, however, it seems to me that this information processor will still do the same processing, even though those screen images will not be produced.

So consider the output of a computer screen like the output of a brain that produces phenomenal experience. The computer seems to animate a screen like a brain animates phenomenal experience producing mechanisms. Here it will not be “information” that causes phenomenal experience itself (just as a screenless computer won’t cause screen images), but rather the way that this information animates associated mechanisms.

On the notion that information in itself creates phenomenal experience without associated mechanisms to animate, I only say that this is “dubious” because all other computational output seems to have mechanisms that produce it. I presume that you’ve not yet provided me with the example of a second which exists this way, because you can’t think of one. Furthermore here we don’t need to assert the truth of various ridiculous thought experiment assertions.

Eric,

“So consider the output of a computer screen like the output of a brain that produces phenomenal experience.”

This gets us back to the original contention in our initial exchange above. The idea that the brain outputs phenomenal experience as though it’s something that then exists separate and apart from the brain, or its neural processing, is your conception of consciousness, not mine. In my conception, phenomenal states are merely intermediate processing states. It’s only an “output” from one part of the system to other parts. That kind of mechanism exists in the device you’re using to read this in the form of interprocess communication (although it lacks the competitive nature of the brain’s version).

“On the notion that information in itself creates phenomenal experience without associated mechanisms to animate, I only say that this is “dubious” because all other computational output seem to have mechanisms that produce them. I presume that you’ve not yet provided me with the example of a second which exists this way, because you can’t think of one.”

As noted above, I haven’t responded because it’s orthogonal to the idea you’re attacking. It’s only relevant to your theory of consciousness. You first have to establish that these non-informational “associated mechanisms” exist.

I’ve given you an option before on this: the physiological interoceptive loop. It’s the closest thing I can think of that matches what you’re positing, but you didn’t seem interested. It’s what the embodied cognition folks like to cite as their objection to computationalism. Of course, it’s not really an obstacle to computationalism, since there can be a robot or virtual body to fulfill similar functions.

Something else to consider. If the brain is producing phenomenal experience, who or what is it producing it for? Who is the audience? What is its nature? How does it function? Is it itself conscious? If so, then what has actually been solved?

Mike,

Well okay, I guess we can go that way as well. Here phenomenal experience, like the pains and images and so on that we have, reflect intermediate neural processing states. Does this contingency permit them to exist by means of any mechanism at all that properly processes associated information? We know of all sorts of things which exist by means of specific mechanisms. Perhaps the entire universe functions this way. But beyond the proposal that phenomenal experience depends upon information rather than any specific mechanisms which display this information, what would be a second intra machine dynamic that exists this way? If defining phenomenal experience as such overcomes the need for unique mechanical properties, then are there any already accepted examples?

I’m not sure that I do. It’s quite common in science to explain various observations with hypothesized mechanisms, such as black holes, electrons, and so on. And sometimes we then find so much evidence for their existence by means of the phenomena that they seek to explain, that these hypothetical entities become extremely well accepted. This is the sort of thing that I’m proposing now. And note that you’re also taking this road by proposing that phenomenal experience exists by means of information independent of any specific substrate conveyance. Either way, we’ll need evidence.

I wouldn’t call it a “Who” but rather a “What”. As I see it this promotes survival by adding a teleological component, since in more open environments, base algorithms seem insufficient. This is displayed by how our robots commonly need help negotiating such environments.

I don’t consider there to be any gods or anything like that. If you perceive your own existence, as well as perceive that I affect your existence, then I suppose that you could be my audience in some sense. And likewise in reverse, on and on for whatever perceives its own existence in an advanced enough way to feel “observed”.

Phenomenal experience? I consider this to be all that constitutes good/bad existence for anything.

I’ve developed a pretty extensive psychology based model about that, and discuss it here often enough.

Yes I do consider it helpful to define what experiences phenomena to be “conscious”. This is to say that anything which feels good/bad is conscious, and anything that doesn’t, isn’t.

I don’t claim to have “solved” anything here. But it seems to me that the institution of science does remain in need of effective reductions regarding phenomenal experience, the dynamics of human function, and so on. I provide many such reductions that seem effective, and they hold regardless of how phenomenal experience comes to exist, which is the topic of our current discussion.

Eric,

“What would be a second intra machine dynamic that exists this way?”

That exists pervasively in computing technology. At the hardware level, there’s communication between the processor, memory chips, storage devices, video circuitry, network card, and all kinds of other things. This is going on in whatever device you’re using to read this. And at the software level, we have all kinds of interprocess communication, for various apps to communicate with each other, as well as with the operating system.

Of course, we know the brain is heavily interconnected. So there’s little doubt that kind of communication is going on. We can see it in the way various regions begin to synchronize their oscillations, indicating that they’re working together. Saying that consciousness makes use of that communication seems pretty uncontroversial.

“It’s quite common in science to explain various observations with hypothesized mechanisms, such as black holes, electrons, and so on.”

Right, but if you’re going to claim similarities with these things, then you should present evidence similar to what was presented to establish them. For example, black holes were worked out mathematically, but no one seriously thought they might exist until astronomers started observing regions with gravitational effects with no light producing body, such as Sagittarius A* (the center of the Milky Way galaxy).

In your case, I’d settle for a solid logical chain of reasoning.

“Yes I do consider it helpful to define what experiences phenomena to be “conscious”.”

“I don’t claim to have “solved” anything here.”

This, I think, brings us to a point we often reach. You mention reductions, but I’m looking for a solution that reduces consciousness to non-conscious functionality. GWT, and IIT for all its faults, do make that attempt. My feedback for your model is that it needs something along these lines. You make a start with the discussion of punishment and reward, but don’t provide enough detail to reduce feeling to non-feeling.

Mike,

Both computers and brains are set up to function by passing information around inside them in various ways. I don’t know that anyone disputes this. I’m certainly not saying that there’s anything supernatural about parts of a computer that are designed to work together doing so, or parts of a brain that evolved as such. The problem is when we propose that phenomenal experience, or what I consider useful to define as consciousness itself, transpires by means of information propagated through any medium whatsoever. That’s where things get fishy. What else transpires by means of any medium at all? If nothing does then we should expect that brain information instead animates certain mechanisms in order to produce phenomenal experience.

Consider why I believe that phenomenal experience producing mechanisms must exist. Firstly it’s known quite well that phenomenal experience itself exists, regardless of how some philosophers might propose otherwise (as denoted for example by the ploy that Eric Schwitzgebel calls “inflate and explode”). If it’s known to exist (a head start that black holes and electrons didn’t have), then the question turns to how it exists. I only submit that information in the brain must be animating associated mechanisms, because that’s the way computers work in general. Screen images cannot exist without a screen for the computer’s information to animate. Conversely, saying that phenomenal experience exists as information independent of what medium conveys it seems to depend upon magic. What could be more magical than a position which holds that brain information on paper which is properly processed into other brain information on paper, creates phenomenal experience? Is this not a solid logical chain of reasoning?

Though you and most everyone else seem fixated on how phenomenal experience becomes produced, that’s not what my own ideas have been developed to reduce. I only object to “informationalism” given that I consider it supernatural. My own models actually begin from the existence of phenomenal experience, and then reduce our nature in ways that our mental and behavioral sciences have failed to so far. If I were a talented (or slimy) salesperson then I’d probably not mention this entire supernatural business. Here I’d simply agree with the status quo in order to potentially help others understand and assess my ideas.

I realize that you’d give up informationalism if you understood that it actually conflicts with naturalism. But it shouldn’t be easy to let go of a mainstream position that you’ve invested a great deal in over the years. If it turns out that you’ll never believe that phenomenal experience by means of information through any medium at all is supernatural, then we’ll need to stop having these conversations. My own models apply even if “God himself” creates this stuff. So here we’d need to stick with psychology. But I haven’t given up yet!

Eric,

“If it turns out that you’ll never believe that phenomenal experience by means of information through any medium at all is supernatural, then we’ll need to stop having these conversations.”

What it will take to convince me is logic or evidence, but we’ve been looping on this for a while, and I suspect continuing to do so may not be productive. This seems like something we’ll simply have to agree to disagree on.

[going up some levels]

I’m not sure what your point is with regards to the profound interconnectivity of the cortex/thalamus. That doesn’t contradict anything I’m saying. I expect much local cortical-cortical interconnectivity. That would be the feedforward and feedback loops. I would also expect much distant connectivity, which provides control/attention mechanisms. But what I would not expect is the propagation of a single signal across multiple exchanges, because you would need error correction at each exchange, and the only error correction I can see working would require a direct connection back to the originating source at each connection. Thus everything would have to be directly connected to everything.
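The worry about relaying a signal across multiple exchanges without correction can be illustrated with a toy simulation. It assumes, purely for illustration, that each relay adds independent Gaussian noise of the same size and that nothing corrects it along the way; under those assumptions the error variance grows linearly with the number of hops, so the typical error grows like the square root of the hop count. The noise level, trial count, and seed are arbitrary choices, not anything measured in cortex.

```python
import random
import statistics


def relay(value: float, hops: int, noise_sd: float, rng: random.Random) -> float:
    """Pass a scalar signal through `hops` noisy relays with no error correction."""
    for _ in range(hops):
        value += rng.gauss(0.0, noise_sd)
    return value


def rms_error(hops: int, noise_sd: float = 0.1, trials: int = 5000, seed: int = 0) -> float:
    """Root-mean-square deviation from the original signal after `hops` relays."""
    rng = random.Random(seed)
    errors = [relay(1.0, hops, noise_sd, rng) - 1.0 for _ in range(trials)]
    return statistics.fmean(e * e for e in errors) ** 0.5


for hops in (1, 4, 16):
    print(hops, round(rms_error(hops), 3))
```

With these settings the error should roughly double each time the hop count quadruples. Whether real cortical relays behave like this depends on assumptions the toy leaves out, such as redundancy across many parallel neurons.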

And yet to combine concepts you need to be able to reliably get different signals from their sources to a single place. That place will need to have direct connectivity to all the possible sources.

As for resilience, the hub architecture seems more resilient to me. You can take out a few spokes, but that doesn’t affect the other spokes. Assuming the semantic pointer architecture, taking out any part of the integral network will bork large swaths of capability. Say you have a hundred neurons, and each has 5 firing patterns (say 10 Hz, 20 Hz, 30 Hz, 40 Hz, 50 Hz). Any one signal will set each neuron to one of its 5 patterns. But if you take out one of the neurons, you can never reproduce any signal exactly. You can’t get a 100 dimensional vector from 99 neurons.

*
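The 100-neuron example above can be made concrete with a small counting sketch. It treats each neuron as one coordinate that takes one of the five rates, exactly as described, and checks two things: removing a neuron cuts the number of distinct patterns by a factor of 5, and two patterns that differed only at the removed neuron become indistinguishable. The seed and the choice of neuron 7 are arbitrary; the sketch models only exact reproduction, the case the comment describes, not approximate decoding.

```python
import random

RATES_HZ = (10, 20, 30, 40, 50)  # the five firing patterns from the example
N_NEURONS = 100


def random_pattern(rng: random.Random) -> tuple:
    """One signal: every neuron set to one of the five rates."""
    return tuple(rng.choice(RATES_HZ) for _ in range(N_NEURONS))


def ablate(pattern: tuple, lost: int) -> tuple:
    """The same pattern as seen by a network missing neuron `lost`."""
    return tuple(rate for i, rate in enumerate(pattern) if i != lost)


def capacity(n_neurons: int, n_rates: int = len(RATES_HZ)) -> int:
    """Number of distinct exact patterns the population can express."""
    return n_rates ** n_neurons


rng = random.Random(42)
lost = 7
a = random_pattern(rng)
# b differs from a only at the neuron that will be removed
b = list(a)
b[lost] = next(r for r in RATES_HZ if r != a[lost])
b = tuple(b)

print(a != b)                                # the intact network tells them apart
print(ablate(a, lost) == ablate(b, lost))    # the damaged network cannot
print(capacity(100) // capacity(99))         # capacity shrinks by a factor of 5
```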

“I’m not sure what your point is with regards to the profound interconnectivity of the cortex/thalamus.”

Only that the physical topology doesn’t require or imply a strict hierarchy, or otherwise centralized system. That isn’t to say there aren’t hierarchies, but there are legions of them, overlapping or orthogonal to each other.

“because you would need error correction at each exchange”

I think you’re overestimating the reliability of any one connection. Often, in a balance with metabolic costs, neurons exist at the minimal signal to noise ratio. The brain seems to make up for this with redundancy and repetition. But noise remains a fact in the system.
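The point about redundancy and repetition offsetting noisy connections can be sketched with simple averaging. The assumption here, which is ours and not a claim about real neurons, is that a readout averages several independent noisy copies of the same value. Under that assumption the noise in the average falls roughly as one over the square root of the number of copies, so each fourfold increase in redundancy should about halve the error.

```python
import random
import statistics


def noisy_copies(value: float, n_copies: int, noise_sd: float, rng: random.Random) -> list:
    """Independent noisy transmissions of the same value (assumed Gaussian noise)."""
    return [value + rng.gauss(0.0, noise_sd) for _ in range(n_copies)]


def decoded_error_sd(n_copies: int, noise_sd: float = 1.0, trials: int = 4000, seed: int = 1) -> float:
    """Spread of the averaged readout around the true value of 0.0."""
    rng = random.Random(seed)
    readouts = [statistics.fmean(noisy_copies(0.0, n_copies, noise_sd, rng)) for _ in range(trials)]
    return statistics.pstdev(readouts)


for n in (1, 4, 16, 64):
    print(n, round(decoded_error_sd(n), 3))
```

The catch, which connects to the metabolic balance mentioned above, is cost: halving the noise this way takes four times as many copies.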

“And yet to combine concepts you need to be able to reliably get different signals from their sources to a single place.”

What makes you think something like this happens? The urge to have a single place in the brain where it all comes together should be scrutinized. It would just put us in the position of needing to figure out how that place works. But all indications are the brain overall is that place, and the details of its operations are how such a place works. Looking for nested centers seems to be flirting with the homunculus fallacy.

“As for resilience, the hub architecture seems more resilient to me.”

And if the hub gets knocked out? You’re probably thinking about the thalamus, but remember a lot of inter-cortical wiring bypasses it, and I haven’t seen anywhere that it displays the kind of plasticity that would be needed. Its role seems more like that of a router than a storage system.


I appreciate your reluctance to consider a central theatre concept due to the homunculus problem, but my idea is more a theatre with an audience of tens or hundreds of thousands, or more, each of whom can potentially jump on stage so the others can see them, and each with their own entourage of supporters.

I think something like the combination of concepts happens in a central group of neurons because I’ve seen the demonstration and practiced with it myself. You can do this at home. Google nengo.ai.

Finally, I’m curious as to the router vs. storage system comment. I don’t see the hub as anything other than a router, just like a chalkboard in a classroom is a router. It needs the “plasticity” of being able to temporarily hold a few of the gazillion possible signals, but only a few at a time.

By the way, I see new concepts getting “stored” by recruiting otherwise un-used or lesser used audience members. Put two concepts on the board, then call out to the audience “who wants to remember this?”

And just to be sure, I don’t see just exactly one chalkboard, but a handful. Maybe one for each cortical modality.


Okay, well maybe there’s less daylight between us than I thought. I guess for me, the crucial point with GWT is that any part (or just about any part) of the cortex can be conscious and any part can be, and usually is, unconscious. What makes something conscious is its causal effects throughout the system. A visual image of an apple doesn’t get passed around, just signals that cause activity related to that image in other regions. What makes that image conscious is a critical mass of the specialist regions responding to it.

I don’t have any issue with calling that regional activity, whether in one of the hubs or in the specialist region itself, semantic pointers. I’m less sure about calling the specialist regions unitrackers. Many undoubtedly are, but I’ve grown leery of accepting philosophical terms without reading about them. They always seem to come with commitments I’m uncomfortable with.


Eric and Mike, you guys talk about information a lot, but I have not seen what you mean when you talk about it. I know there are multiple concepts about information, but you should be using only one in a given discussion, and I would like to know which concepts those are.

For example, Eric has said (above)

“brains could potentially still produce consciousness by means of information alone”,

“models of how phenomenal experience become created, this might be through associated EM fields rather than information alone”

“if professionals in general believe that some manifestation of information itself is what makes this happen”

These phrases indicate that information can be a thing in itself, a thing alone. How does that work? Because that doesn’t make sense to me.

Mike has said, in the other thread,

“A region transmits information to the regions it’s connected to.”

“If certain highly connected regions become excited […] they propagate that signal, or compatible signals, in favor of others.”

Exactly what is transmitted or propagated?

Again, what is information, and what precisely distinguishes it from non-information?


Information, like energy, is a very useful concept that turns out to be devilishly hard to define. Here is one from a book I’ve been perusing lately. It’s based on Shannon information theory:

Note the emphasis on causal history. It’s why when people tell me the brain can’t be about information processing, that it has to be about the brain’s “causal powers”, I think they’re making distinctions without a difference.
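As a worked illustration of “reduction of uncertainty” in Shannon’s sense, here is a minimal sketch assuming a hypothetical receiver that starts with eight equally likely possibilities and is left with two after a signal arrives. The information conveyed is the drop in entropy, 3 - 1 = 2 bits. The numbers are made up for illustration, not taken from the book.

```python
import math

def entropy_bits(probs):
    """Shannon entropy H = -sum p log2 p, in bits (zero-probability terms contribute 0)."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical receiver: before the signal, 8 equally likely states of the world.
prior = [1 / 8] * 8
# After the signal, only 2 of them remain possible, still equally likely.
posterior = [1 / 2, 1 / 2]

gained = entropy_bits(prior) - entropy_bits(posterior)
print(gained)  # → 2.0
```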


Okay, any chance you wanna explain how “A region transmits reduction of uncertainty to the regions it’s connected to” ?


I’m going to suggest to you that the more pertinent Information Theory concept is Mutual Information. Reduction of uncertainty is a useful measure with regard to information channels and how much can be communicated through them. And while it sounds like “what the brain does”, it doesn’t give much clue as to how it does it.

I’m going to hope you have the time to google mutual information and then tell me what it’s really about, but I think it is easier to physically describe what’s going on, and shows a more direct connection with the causal history you mention.


[if you have the time. 🙂 ]


I can’t provide any kind of authoritative accounting of the information that gets exchanged between brain regions. Neuroscientists know a lot about the connections, and how and when regions have synchronized oscillations, implying binding between them, but most of the details aren’t known yet. The early sensory regions and late motor regions are pretty well understood, but the further from that things progress, the more abstract they become and the harder to correlate with stimuli or behavior.

I will say this. The receiving regions don’t receive a copy of the content from the source region, but signals that excite circuitry in the receiving region that is relevant to the content in the source region. For example, the hippocampus likely receives a signal from, say, V2, that excites some of its neurons in such a way that synapses are strengthened in its high-plasticity circuits. If the strengthening is sufficient (from repetition or by a strong enhancing signal from affective regions), the hippocampus will later backpropagate that signal to the V2 circuitry that caused that synaptic strengthening, causing the original pattern to form again repeatedly, rehearsing it until a long-term memory is formed with the synapses in the less plastic V2.

Based on the Wikipedia intro, mutual information sounds similar to Sterling’s concept of information. (They both come from Shannon’s work.) I didn’t get into the mathematics, so that’s about the limits of my insight.

BTW, other names for “reduction of uncertainty” are “recognition” or “prediction.” (Don’t hit me for using the p-word. 😮 )


I’m not looking for an authoritative account. I’m looking for a plausible mechanistic account. But I recognize you don’t have such an account in mind, so don’t worry about it.

In general, I’m thinking in terms of semiotics … symbols and signals and such. The concept of amount of information, reduction of uncertainty, makes no account of meaning/intention of that information, and so is only very loosely associated with recognition/prediction. Mutual information, on the other hand, is related to how meaning is created. My naive, non-mathematical, (very possibly mistaken) understanding is that the mutual information between two systems is essentially how much the state in one system depends on the state of the other system. This dependence is not current, but is a dependence based on the causal histories of both systems. So a frog has a mechanism to recognize a black spot moving against a bright background. This mechanism has mutual information with current existing flies because the causal history of creating that mechanism involved interaction with actual past flies. If there had been no flies, or no fly-like things with the same value, there would not be said mechanism.
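The frog example can be put in numbers. Mutual information, I(X;Y) = Σ p(x,y) log2[p(x,y) / (p(x)p(y))], measures how far the detector’s state and the fly’s presence depart from independence; it also equals the expected reduction in uncertainty about one variable from observing the other, which is why it lines up with “reduction of uncertainty.” A minimal sketch follows; the probabilities are hypothetical, and the formula captures only the statistical dependence, not the causal history that the comment reads into it.

```python
import math

def mutual_information_bits(joint):
    """I(X;Y) = sum over (x, y) of p(x,y) * log2[p(x,y) / (p(x) p(y))], for a joint table {(x, y): p}."""
    px, py = {}, {}
    for (x, y), p in joint.items():
        px[x] = px.get(x, 0.0) + p  # marginal over the world (fly / none)
        py[y] = py.get(y, 0.0) + p  # marginal over the detector (fires / quiet)
    return sum(p * math.log2(p / (px[x] * py[y]))
               for (x, y), p in joint.items() if p > 0)

# Hypothetical numbers: a fly is present half the time, and the dark-spot
# detector fires on 90% of fly presentations and 10% of fly-free ones.
tuned = {("fly", "fires"): 0.45, ("fly", "quiet"): 0.05,
         ("none", "fires"): 0.05, ("none", "quiet"): 0.45}
# A detector whose firing has nothing to do with flies.
unrelated = {("fly", "fires"): 0.25, ("fly", "quiet"): 0.25,
             ("none", "fires"): 0.25, ("none", "quiet"): 0.25}

print(round(mutual_information_bits(tuned), 3))  # → 0.531
print(mutual_information_bits(unrelated))        # → 0.0
```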

So what about “meaning”? Maybe meaning is the amount of mutual information of one system with respect to another system. So the recognition system in the frog that tracks dark spots has mutual information relative to flies, and the output signal (neurotransmitters) from that system has essentially that same mutual information. Those neurotransmitters thus can be a symbol for “fly is out there right now”. You could say the neurotransmitters “have” that information. One way to use this symbol is to transmit it to another system which responds to it with something useful, such as the process of launching a sticky tongue in that direction. This “transmission” happens (probably) by dumping the neurotransmitters into a synaptic cleft attached to the target system. Frogs probably do this.

Another way to use the symbol (neurotransmitters) is to dump them on multiple neurons that are in a system which then creates a unique pattern of outputs (neurotransmitters from multiple neurons). The entire purpose of the target system is to generate a unique pattern depending on the input system. This pattern would share the mutual information, and so, meaning, of the specific input system. This target system (a semantic pointer) could thus create unique patterns for many different input systems (pattern recognizers, predictors, unitrackers), and each unique pattern of output would have mutual information, meaning, with the respective input(s) (could be more than one at a time). This signal can be duplicated at multiple sites as long as the output pattern at any given site is unique to the input pattern(s). The output patterns (neurotransmitters) would share the mutual information with the original inputs.
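Nengo’s Semantic Pointer Architecture combines pointers by binding them with circular convolution (the holographic reduced representation scheme), which produces a new pattern of the same size that is distinct from its inputs yet still carries information about them. Below is a minimal pure-Python sketch of that idea; it does not use Nengo’s API, and the dimensionality, the vocabulary (`color`, `red`, …), and all function names are hypothetical.

```python
import math
import random

D = 256  # hypothetical dimensionality of each semantic pointer

def random_pointer(rng, d=D):
    """A random unit vector, standing in for a fresh semantic pointer."""
    v = [rng.gauss(0.0, 1.0) for _ in range(d)]
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def bind(a, b):
    """Circular convolution: c[i] = sum_j a[j] * b[(i - j) mod d]; output has the inputs' size."""
    d = len(a)
    return [sum(a[j] * b[(i - j) % d] for j in range(d)) for i in range(d)]

def approx_inverse(a):
    """Involution a*[i] = a[-i mod d], the usual approximate inverse used for unbinding."""
    d = len(a)
    return [a[(-i) % d] for i in range(d)]

def similarity(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / math.sqrt(sum(x * x for x in a) * sum(y * y for y in b))

rng = random.Random(1)
color, shape, red, square = (random_pointer(rng) for _ in range(4))

# Bind two role/filler pairs and superpose them: "red square" as one 256-d pattern.
scene = [x + y for x, y in zip(bind(color, red), bind(shape, square))]

# Unbinding the "color" role yields a noisy version of "red", not of "square".
guess = bind(scene, approx_inverse(color))
print(round(similarity(guess, red), 2), round(similarity(guess, square), 2))
```

The recovered vector is only approximately `red`; snapping it back to the nearest known pointer is the kind of error correction discussed next.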

Now transmission of signals has to deal with noise. Noise reduces mutual information. So any signal that needs to be transmitted across the brain has to either avoid noise or correct it. One way to reduce noise is to reduce transfer points. One long axon is better than five shorter axons. Another way to reduce noise is error correction. Digitization helps (because it is easy to correct a value like 5.23 back to the nearest whole number, 5), but that isn’t the only way. So for semantic pointers there is a (neurologically plausible) mechanism to move the complex “unique” output pattern to the closest “known” output pattern.
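The cost of multiple transfer points, and what clean-up buys, can be sketched in a toy simulation. Assumptions: a hypothetical five-word vocabulary of random unit vectors, Gaussian noise added at each of five hops, and clean-up implemented as an exact nearest-neighbour lookup. In SPA models this role is usually played by an associative (“clean-up”) memory built from neurons; this code does not model that circuitry or its metabolic cost, and all names are illustrative.

```python
import math
import random

D = 128         # hypothetical pointer dimensionality
HOPS = 5        # transfer points between source and destination
NOISE_SD = 0.1  # hypothetical per-element noise added at every hop

def unit(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def add_noise(v, rng):
    """One noisy transfer: independent Gaussian noise on every element."""
    return [x + rng.gauss(0.0, NOISE_SD) for x in v]

def clean_up(v, vocab):
    """Snap a noisy vector to the most similar known pointer (nearest neighbour by dot product)."""
    return max(vocab.values(), key=lambda known: dot(v, known))

def transmit(v, hops, rng, vocab=None):
    """Pass v through `hops` noisy transfers, optionally cleaning up after each one."""
    for _ in range(hops):
        v = add_noise(v, rng)
        if vocab is not None:
            v = clean_up(v, vocab)
    return v

rng = random.Random(2)
vocab = {name: unit([rng.gauss(0.0, 1.0) for _ in range(D)])
         for name in ("fly", "leaf", "rock", "water", "shadow")}
source = vocab["fly"]

raw = transmit(source, HOPS, rng)               # noise accumulates hop after hop
corrected = transmit(source, HOPS, rng, vocab)  # error corrected at every hop
print(round(dot(unit(raw), source), 2), corrected == source)

# The scalar analogue from the comment: rounding is clean-up onto the integers.
print(round(5.23))  # → 5
```

Without clean-up the similarity to the source degrades with every hop; with clean-up at each hop the original pointer arrives intact, but only because every hop has access to the full vocabulary.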

So finally, I bring all this up because the “propagation” of information you, Mike, seem to be suggesting seems to involve multiple transfer points, and the error correction available to semantic pointers seems unlikely to be available, or at least to be metabolically expensive, in all of those places.

Does this give you a better idea of what I’m looking/hoping for?


I don’t have much of an opinion on the semiotic aspects. They sound plausible, but my knowledge of them is pretty limited. I do wonder how they might tie in to Shannon’s information theory, which figures heavily in the understanding of why neurons work the way they do. I do agree that the frog’s reflexive tongue snapping of flies is due to the intersecting causal history of its evolution and that of flies.

On the multiple transfer points, I do think evolutionarily, there has to be some benefit to that more distributed framework. The empirical reality is that the thalamo-cortical system is profoundly interconnected, and brain scans correlate widespread activation of the cortex with conscious perception. (With all the caveats for possible report and post perceptual cognition confounds.) This arrangement goes all the way back to basal vertebrates. It wouldn’t have endured as long as it did unless it provided substantial advantages.

The obvious possibility, of course, is that it’s more resilient. People can sustain substantial injury to the cortex and still function well. Michael Gazzaniga points out that consciousness is very difficult to stamp out in the brain. It seems to function as long as there is some kind of quorum of functionality present.

And as I noted above, I actually think the semantic pointers work better if they’re distributed. That’s probably the way to think about what each receiving region has about the content of the source region. If the SPs were in some central place, that central place would have to have more plasticity than is probably realistic in a physical neural network. The only candidate might be the hippocampus, but patient HM remained conscious despite having had his hippocampi removed.

What makes you so attracted to the idea of a central storage area?


James,

I certainly agree that common definitions for terms like “information” are needed for associated discussions. I’m not sure if that’s been confounding Mike and me at the moment, though it would certainly be a good idea for me to present what I consider to be a useful definition.

As I’m using the “information” term, it refers to a relatively low-energy type of input that a machine could process in an assortment of ways. For example, there isn’t much difference, as input, between pressing one key on my computer versus another. Slightly different information will be received, though once processed, the two can have extremely different implications. So to me these seem like low-energy causal dynamics, unlike most everything else. This definition breaks reality into high-energy causal dynamics, and thus non-computation, and the contrasting low-energy causal dynamics, which may be referred to as “information”.