The Great Consciousness Debate: ASSC 25

This is a long video. The first hour or so features presentations on the Global Neuronal Workspace Theory by Stanislas Dehaene, Recurrent Processing Theory by Victor Lamme, Higher Order Thought Theory by Steve Fleming, and Integrated Information Theory by Melanie Boly. It also has some brief recorded remarks from Anil Seth on Predictive Coding. (Fleming …

Tag: higher order theory

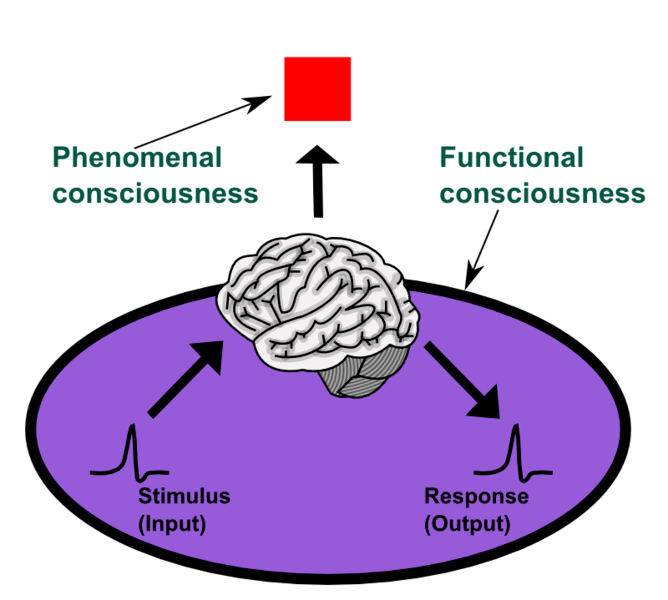

A perceptual hierarchy of consciousness

I've discussed many times how difficult consciousness can be to define. One of the earliest modern definitions, from John Locke, was, "the perception of what passes in a man's own mind." This definition makes consciousness inherently about introspection. But other definitions over the centuries have focused on knowledge in general as well as intentionality, the …

Stimulating the prefrontal cortex

(Warning: neuroscience weeds) This is an interesting study getting attention on social media: Does the Prefrontal Cortex Play an Essential Role in Consciousness? Insights from Intracranial Electrical Stimulation of the Human Brain. Ned Block is one of the authors. (Warning: paywalled, but you might have luck here.) The study looks at data from epileptic patients …

The substitution argument

A preprint came up a few times in my feeds, titled: Falsification and consciousness. The paper argues that all the major scientific theories of consciousness are either already falsified or unfalsifiable. One neuroscientist, Ryota Kanai, calls it a mathematical proof of the hard problem. Based on that description, it was hard to resist looking at …

The issues with higher order theories of consciousness

After the global workspace theory (GWT) post, someone asked me if I'm now down on higher order theories (HOT). It's fair to say I'm less enthusiastic about them than I used to be. They still might describe important components of consciousness, but the stronger assertion that they provide the primary explanation now seems dubious. A …

Joseph LeDoux’s theories on consciousness and emotions

In the last post, I mentioned that I was reading Joseph LeDoux's new book, The Deep History of Ourselves: The Four-Billion-Year Story of How We Got Conscious Brains. There's a lot of interesting stuff in this book. As its title implies, it starts early in evolution, providing a lot of information on early life, although …

Higher order theories of consciousness

I've posted on HOT (higher order thought theories of consciousness) before, but there's a new paper out covering the basics of these types of theories. Since first reading about HOT many months ago, the framework has been growing on me. The paper is not too technical and I think would be accessible to most interested …

Higher order theories of consciousness and metacognition

Some of you know, from various conversations, that over the last year or so I've flirted with the idea that consciousness is metacognition, although I've gradually backed away from it. In humans, we typically define mental activity that we can introspect to be conscious and anything else to be unconscious. But I'm swayed by the …